ECE faculty design chips for efficient and accessible AI

Artificial intelligence (AI) has infiltrated nearly every aspect of modern society. Behind the sleek interfaces of ChatGPT, Alexa, and Ring doorbells are machine learning algorithms that crank through unprecedented amounts of data to deliver answers. The demand for AI at consumers’ fingertips is fueling new hardware solutions to support those applications.

“Machine learning has evolved in the last 10 years to become one of the biggest, most important workloads of integrated circuit design,” said Prof. Zhengya Zhang.

As a result, creative new solutions are required to serve the higher computing demands of AI – solutions that are coming from ECE chip designers.

From data centers to edge computing for IoT

Since about 1960, integrated circuit designers have been able to provide more computing power by doubling the number of transistors about every two years. This observed pattern, known as Moore’s Law, is no longer sustainable. Instead, additional processing power often comes from high numbers of computers run simultaneously as a network, grouped in clusters or server farms. The financial and environmental cost of these computing systems is enormous.

For example, the now-outdated ChatGPT 3.5 was estimated to cost OpenAI $700,000 daily to run. Most of these large models are run on the cloud, a familiar term for commercial data centers around the world with banks of high-power, remote computers. These data centers require a large, continuous power supply that can only be met with fossil fuels or nuclear power.

“There’s this huge problem that machine learning is taking too much power,” said Michael P. Flynn, Fawwaz T. Ulaby Collegiate Professor of ECE. “We’re seeing that now really starting to occur on a global scale, with data centers taking obscene amounts of electricity. A data center now takes a hundred megawatts of power—so it needs a medium-sized power station. The electricity consumption in the U.S. had been dropping until this year, because now those data centers are starting to cause a problem.”

There is also a demand for specialized processors to implement and accelerate machine learning in small, low-power devices. The “smart” devices that are increasingly making our lives more convenient are linked together by wireless signals into an accessible network called the Internet of Things (IoT). Within the IoT, edge computing brings the computational work closer to the place where sensors or other devices are gathering the data. The benefits of edge computing include faster response times, improved efficiency, and enhanced security—all while reducing network traffic.

Edge computing has the ability to transform applications in traffic control, healthcare, supply chain industries, agriculture, and more. Examples include streaming and processing real-time data from medical devices, detecting traffic accidents, alerting museum employees to concealed weapons, or monitoring water and soil at a farm. It can be difficult to seamlessly implement machine learning at the edge due to energy, size, and workload constraints, often leading industry researchers to sacrifice some aspect of power or efficiency.

A diverse, collaborative group of ECE researchers is working to combat these challenges and enable efficient, sustainable, and accessible AI.

“This is a two-way path,” added Flynn. “A lot of the research at Michigan is using integrated circuits to make efficient AI and machine learning possible. But there’s also going to be an impact from machine learning being used for better integrated circuit design.”

Say goodbye to power cords and batteries

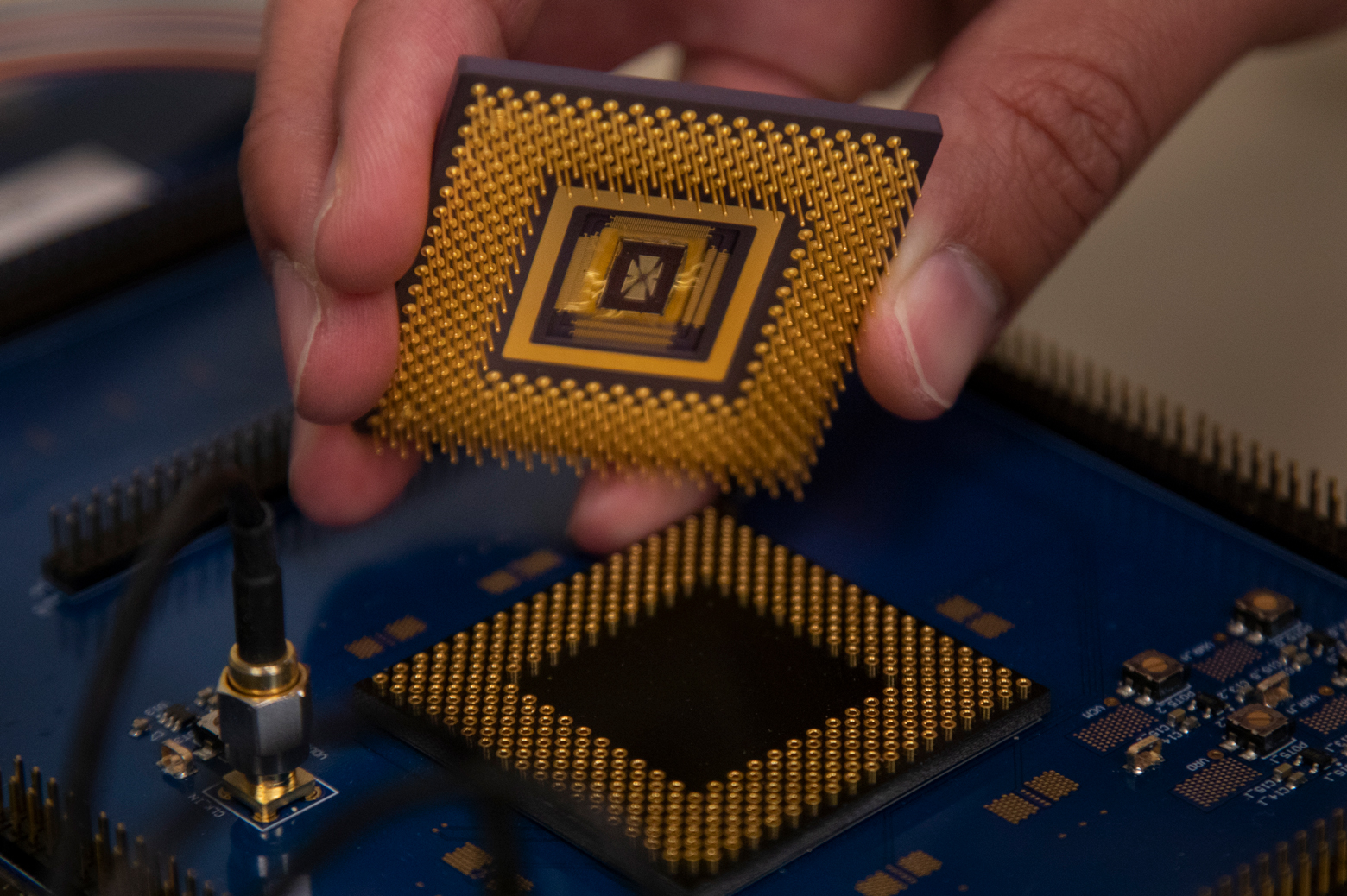

With the slowing of Moore’s Law, researchers shifted from building general, all-purpose processors to domain-specific processors like graphics processing units (GPUs). Profits of companies like NVIDIA have skyrocketed due to their popular GPUs and their ability to run large calculations on their cloud facilities. However, running big machine learning algorithms on GPU clusters isn’t the most elegant solution.

“You can build custom silicon chips that are better at executing the types of operations that machine learning requires,” said Dennis Sylvester, Edward S. Davidson Collegiate Professor of Electrical and Computer Engineering. “People found a really killer application for building custom circuits, as opposed to the general purpose ones we all used to work on.”

These custom chips, called application-specific integrated circuits (ASICs), have been used in research and industry since the 1960s, but they now empower researchers to focus on machine learning implementations.

For example, in collaboration with David Blaauw, the Kensall D. Wise Collegiate Professor of EECS, and Hun-Seok Kim, the Samuel H. Fuller Early Career Professor of ECE, Sylvester is developing ASICs that integrate with sensors to allow devices like voice-activated speakers to perform intelligent tasks. Historically, users have plugged these speakers into power outlets to sustain the power required for these AI features. Amazon Alexa, Apple’s Siri, and Google Assistant, for instance, are constantly “listening” for commands so that they can respond instantly to any request, a feature that consumes a low level of “background” power. The team’s chips interface with an audio sensor to reduce the background power needed to run the voice activation, so that the devices no longer require a power outlet.

“From the microphone all the way through the sensor interface and data converter and the back end, through the deep learning layers of the machine learning algorithm, we optimized the whole system,” Sylvester said. “This little system that continuously consumes a microwatt, hears the name Alexa or one of our keywords and wakes up another system that would be in a very deep, low-power state. Then it would be ready to do natural language processing, answer queries, or anything more complex.”

This isn’t the only project focusing on keyword recognition for triggering additional AI functions in energy-constrained devices like smart speakers. Prof. David Wentzloff led a collaboration with Sylvester, Blaauw, and research scientist Mehdi Saligane to design a system-on-chip (SoC) for an ultra low power wake word application. An SoC is a chip with multiple components in addition to the central processor, such as timers, power management, or sensors. Designing an SoC for this purpose was the first aim of the Fully-Autonomous SoC Synthesis using Customizable Cell-Based Synthesizable Analog Circuits (FASoC) program.

“For this project [FASoC], we leveraged machine learning, but the goal was to reduce the power consumption of the device without compromising its capability,” explained Wentzloff. “So it’s still very capable. It can still do some very impressive stuff in speech recognition, but it can do so very efficiently by leveraging the ML algorithms.”

Ambiq (previously Ambiq Micro), co-founded by Blaauw, Sylvester, and alum Scott Hanson (BSE MSE PhD EE ‘04 ‘06 ‘09) in 2009, markets this type of ultra low power chip for the next generation of wireless, intelligent edge devices. Ambiq chips have already been deployed in devices like smart watches and rings that monitor aspects of health and fitness.

A second company, CubeWorks, co-founded by Blaauw, Sylvester, Wentzloff, alum Yoonmyung Lee (MSE PhD EE ‘08 ‘12), and former faculty member Prabal Dutta (now at UC Berkeley) in 2014, develops and sells tiny wireless sensors with batteries lasting up to five years. CubeWorks sensors have been instrumental in monitoring temperature-sensitive healthcare supplies like vaccines, and they are easily deployed in discreet security systems.

Some of these technologies enable unprecedented flexibility and stability for users, by replacing power cords with batteries. But ultimately, Wentzloff aims to reduce the power consumption of edge devices enough to eliminate their need for power outlets or batteries.

“How many batteries do you want to change every day, or recharge? Probably zero; you’re not going to recharge 100 batteries every day. So that’s already limiting how many things individual consumers would buy,” he said, “but we’re a small part of the market. The larger parts of the market are smart cities, industry, food and beverage, or asset tracking. Think of every FedEx and UPS and USPS package out there today. There’s billions of these things. So this is where the scale happens, and the scale is limited by batteries.”

How do researchers make a device that doesn’t need an outlet or battery? Energy can be harvested from renewable sources such as light, heat, and vibration, or even from less common sources such as audio, radio frequency, or decomposition (bio-harvesting). That harvested energy can be stored in capacitors, a sustainable alternative to batteries, to be used when the renewable energy source isn’t available, such as on a cloudy day or at night.
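For a rough sense of scale, the usable energy in a capacitor discharged between two voltages follows from the standard capacitor energy relation. The capacitance, the voltage window, and the 1 µW load below are illustrative assumptions, not figures from the researchers’ devices, and the estimate ignores leakage and conversion losses.

$$
E = \tfrac{1}{2}\,C\left(V_{\max}^{2} - V_{\min}^{2}\right)
$$

With an assumed $C = 0.1\ \text{F}$, $V_{\max} = 3\ \text{V}$, and $V_{\min} = 2\ \text{V}$:

$$
E = \tfrac{1}{2}(0.1)(9 - 4) = 0.25\ \text{J}, \qquad
t = \frac{E}{P} = \frac{0.25\ \text{J}}{1\ \mu\text{W}} = 2.5 \times 10^{5}\ \text{s} \approx 2.9\ \text{days}
$$

Under these assumptions, a microwatt-class load could ride through several days without any harvested input.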

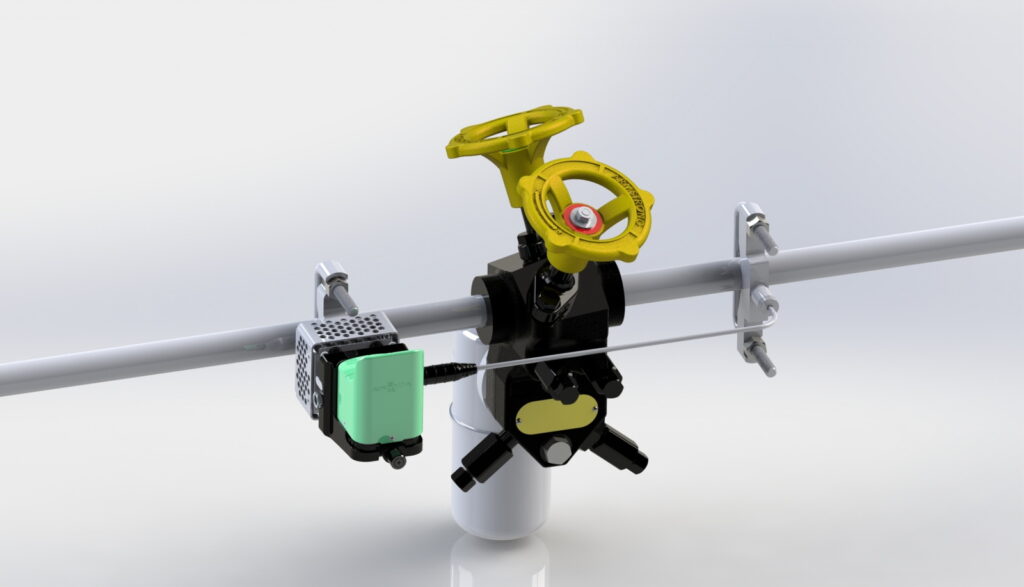

Wentzloff co-founded Everactive with colleague Benton Calhoun of the University of Virginia in 2012 to develop these battery-free sensors for industry. Their first product harvests heat energy to monitor steam traps, allowing facilities managers to keep their buildings safe and efficient without constantly performing manual maintenance checks and changing batteries. Another product uses a network of wireless, batteryless sensors to monitor industrial equipment to help companies avoid and address broken machinery. These devices can run on energy harvested from heat, vibration, or light.

“You know, when you start looking through a lens of, ‘Hey, I can’t use a battery. Where do I have to find energy?’ a lot of things start to look like sources of power,” Wentzloff said. “There’s a big environmental aspect of this work. If we enable a world where there’s a trillion batteries out there, I feel like we failed as engineers.”

A shift toward memory-centric computing

As demand for AI grows in consumers’ everyday lives, and researchers work to meet this need while offsetting its enormous power draw, a huge bottleneck to achieving these goals is memory capacity. The datasets analyzed by machine learning algorithms are larger than ever, but they also include a lot of “non-data” as zeroes or missing values. It costs time and energy to step through each of these meaningless data points, and it’s an inefficient use of memory storage.
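The cost of stepping through zeroes can be illustrated in software. The sketch below is a minimal example under assumed data, not the researchers’ hardware approach: it stores only the nonzero entries of a vector and computes a dot product that never touches the zeroes. The names `to_sparse` and `sparse_dot` and the sample values are hypothetical.

```python
def to_sparse(values):
    """Keep only the nonzero entries as (index, value) pairs."""
    return [(i, v) for i, v in enumerate(values) if v != 0]


def sparse_dot(sparse_a, dense_b):
    """Dot product that visits only the stored nonzero entries of a."""
    return sum(v * dense_b[i] for i, v in sparse_a)


# Hypothetical activation vector that is mostly zeroes.
activations = [0, 0, 3, 0, 0, 0, 2, 0]
weights = [5, 1, 4, 2, 7, 9, 6, 8]

sparse_activations = to_sparse(activations)       # 2 stored entries, not 8
result = sparse_dot(sparse_activations, weights)  # 2 multiplications, not 8

# Reference: the dense version multiplies every pair, zeroes included.
dense_result = sum(a * w for a, w in zip(activations, weights))
```

Both versions give the same answer; the sparse one stores and multiplies two entries instead of eight.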

“In a lot of cases, the performance of the system is limited by the memory rather than the computing itself,” said Wei Lu, the James R. Mellor Professor of Engineering. “That actually has a big impact on research on integrated circuits because previously, the focus was to make the circuits faster. And now the focus is to design circuits that support the memory access. That’s a big paradigm shift.”

Lu specializes in designing improved memory architectures and modules for integrated circuits. For machine learning applications, his work reduces the movement and storage of the data through the model. A machine learning model like a neural network consists of progressively finer-grained layers, which filter the input data to calculate an answer. In a conventional system, the output data from each layer are stored before moving as the input data to the next layer. In a memory-centric architecture, the data move through each layer without being stored, maximizing the system’s efficiency and performance.

“It’s really an enabler for a lot of applications at the edge,” Lu said, “where you have power, speed, thermal, and cost constraints. It’s lower latency, more secure, and personalized, compared to running these models on the cloud.”

One of the most groundbreaking memory technologies that Lu has developed is resistive RAM (RRAM or ReRAM), which takes advantage of components called memristors working in parallel to increase computer performance. Memristors store information as resistance in addition to performing computing functions, allowing for energy and space conservation on a computer chip.

Crossbar, a company co-founded by Lu and ECE alum Sung Hyun Jo (PhD EE ‘10) in 2010, first announced their working RRAM prototype in 2013, promising faster and more powerful mobile devices. Since then, Lu and colleagues have used their memristors to improve the learning speed of a reservoir computing neural network and integrated memristors into a memory processing unit (MPU) that converts the analog signal to digital for accelerating a traditional digital computer.

Lu and Zhang have marketed the MPU technology through their startup company MemryX, launched in 2019. MemryX products promise high performance, low latency, and low power, making them a practical solution for AI integration in devices from phones to robots to virtual reality headsets, and more.

Another way to improve both the capacity and energy efficiency of memory modules is to combine computation and storage functions in a circuit. RRAM is perfect for these architectures, called compute-in-memory, which eliminate the time and energy costs of transporting data between the memory and computation components of an integrated circuit. In addition, there are many other ways to successfully execute compute-in-memory and do memory-centric computing.
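One common way to picture compute-in-memory on a resistive crossbar is as an analog matrix-vector multiplication: each device passes a current equal to its conductance times the applied voltage (Ohm’s law), and the currents on a shared column wire add up (Kirchhoff’s current law). The sketch below simulates that idealized behavior in software. It is not a model of the team’s chips: it ignores wire resistance, device nonlinearity, and data converters, and the function name `crossbar_mvm` and all values are hypothetical.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized resistive crossbar.

    conductances[i][j] is the conductance of the device joining input
    row i to output column j; voltages[i] is the voltage on row i.
    Each device contributes G * V, and each column wire sums the
    contributions of the devices attached to it.
    """
    n_rows = len(voltages)
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 3x2 array: conductances in microsiemens, voltages in volts,
# so the resulting column currents are in microamps.
G = [[1.0, 2.0],
     [0.5, 0.0],
     [2.0, 1.0]]
V = [1.0, 2.0, 0.5]

currents = crossbar_mvm(G, V)
```

In the physical array every product and sum would happen at once where the weights are stored, which is why no weight has to travel to a separate processor.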

“The previous architectures were like spending a long time driving on the highway to the grocery store, spending very little time buying one thing, and driving all the way back home. That time and energy is wasted,” said Blaauw. “Instead, we bring the grocery store to where you live.”

“We have to fundamentally rethink our architectures and make them more memory-centric, and have very efficient compute as well,” added CSE Prof. Reetuparna Das. “This new perspective goes in the completely opposite direction of conventional wisdom.”

Das, Sylvester, and Blaauw are collaborating on a way to convert SRAM memory structures into parallel processing units, allowing convolutional neural networks to be run on conventional chips with small modifications. This makes the system much more energy efficient, but there are additional, lesser known benefits.

“One big advantage of this technique, which we call Neural Cache, is area,” said Das. “Since we are reusing a lot of the memory structures to do compute, you suddenly save a lot of silicon area. All of that area can be used to create more memory, so you can store more machine learning parameters on chip and all sorts of advantages start opening up.”

Chips and computers inspired by the brain

The human brain is incredibly efficient at processing sensory information. Humans can automatically locate the direction of a sound, quickly correct balance, and compose images through the visual system in real time. All of these processes and more occur simultaneously in the brain, while consuming only 17–20 watts of power, with only about 3 watts driving communication and calculation functions. In contrast, the world’s fastest supercomputer, Frontier, located at Oak Ridge National Laboratory in Tennessee, uses 27 megawatts to achieve a similar level of processing.

Many researchers attribute the brain’s efficiency to the analog nature of its processing and communication. Analog computing emerged and gained popularity during World War II, when it was used to estimate the path of falling bombs, fire battleship guns, and decode encrypted messages. In the 1950s–60s, analog computing was overtaken by digital computing, which was faster for all-purpose tasks.

Now, researchers are rethinking the potential of analog computing for AI tasks. For example, analog integrated circuits are well-suited to quickly performing specific tasks, such as running a machine learning model with low power consumption.

Flynn, Lu, and Zhang are using the brain as inspiration to perform machine learning tasks in edge devices. “The idea is that brains use analog neural networks and they’re super efficient. We’ve built circuits that do sort of what the brain does for image processing,” said Flynn.

These types of integrated circuits can be used in computer vision demonstrations, coordinating 50 cameras that deliver real-time object detections. The system will analyze 30 frames per second while consuming under 10 watts of power—a useful model for applications like security cameras, monitoring warehouse floors, and navigating automated vehicles.

“I see this work as two stages: memory-centric computing and bio-inspired computing,” added Lu. “There are features in biology that are beyond just processing data in memory, that are actually using the device and network dynamics to perform computation.”

The team has integrated the memristor-based RRAM technology spearheaded by Lu into memory devices, including the first standalone programmable memristor computer. To do this, Zhang and Flynn designed an integrated circuit that contained an array of Lu’s memristors, a digital processor, and digital/analog converters to translate the analog data from the memristors into a digital signal for the digital processor.

“The hope is that going back to analog computing can help alleviate the huge power load that machine learning has created,” added Flynn. “There’s this promise out there that analog computing could make a big difference, so that’s something we’ve really been working on.”

Lu’s work developing tunable memory technologies was also crucial for further development of brain-inspired technologies. By adjusting the resistance of a network of linked memristors by moving lithium ions through the system, his team could model the activity of neural pathways in the brain.

Newer work allows the team to integrate the time-sensitivity of the brain’s neuronal model as well—just like neurons returning to baseline voltage after firing, the resistance of the memristors “relaxes” back to an increased baseline resistance after stimulation. This technology will allow machine learning models to efficiently process time-dependent data like audio or video files.

“Neuroscientists have argued that competition and cooperation behaviors among synapses are very important. Our memristive devices allow us to implement a faithful model of these behaviors in a solid-state system,” said Lu.

Prof. Robert Dick studies the structure and learning processes of neural networks to improve the efficiency of AI in embedded systems like phones and wearable devices. Ideally, he says, these devices should be context-aware so that they can be ready to help you when you need them.

“Imagine you’ve got a fairly knowledgeable friend with you, who is paying attention to what’s going on around you,” Dick said, “and there’s some event that happens where you need some help. You don’t have to spend 10 minutes explaining to them what’s been going on. You just ask for help, and they know what to do. That’s what AI-enabled edge devices should be like.”

Achieving this is a big challenge, due to the energy required to constantly process data like audio or images. The machine learning algorithms and the integrated circuits must improve together, becoming better matched to support each other while using less energy.

The most accurate machine learning algorithms could have thousands or millions of filters for the data, or “neurons,” so one way to reduce their energy use is called pruning the network, or removing connections. The brain prunes connections too, especially during child and adolescent development. Unneeded, redundant, or infrequently used pathways for information flow in the human brain are atrophied or altogether removed, making its communication and processing much more efficient in adulthood. In a similar manner, researchers like Dick can remove connections from a machine learning algorithm to increase speed and efficiency.

“You can rip out chunks of the network,” Dick said. “And then you can run this thing on less hardware with less memory. The problem is, if you do this in a straightforward way, you start to lose accuracy pretty quickly. When you simply rip out about half of the network, it starts to make mistakes. We found that if you make the filters orthonormal, so they don’t overlap with each other, you can rip out about 90% of the network before the accuracy starts to drop much. You get the same results with a lot less energy, time, or hardware.”
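To make the idea of pruning concrete, the sketch below shows magnitude pruning, a simple and widely used baseline that zeroes out the weights with the smallest absolute values. It is a swapped-in illustration of the “straightforward” kind of pruning, not the orthonormal-filter method Dick describes; the function name `prune_by_magnitude` and the weight values are hypothetical.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out roughly `fraction` of the weights, smallest magnitudes first."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights for one small layer.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.1, -0.02, 0.3]

# Remove half of the connections, keeping the four largest in magnitude.
pruned = prune_by_magnitude(weights, 0.5)
```

The zeroed entries no longer need to be stored or multiplied. Whether accuracy survives depends on how the network was trained, which is the problem the orthonormality constraint is meant to address.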

Another of Dick’s projects modeled a computer vision system on the human biological vision system. While classic computer vision models took a high resolution image of an entire scene and analyzed the whole thing, the human vision system is mainly low resolution, with a small area of high resolution focus.

“It’s this multi-round adaptive system where you capture an image of your environment that’s super low resolution except in a tiny area, and then you determine whether you have enough information to make your decision accurately. If you do, you can stop now and not burn any more energy. If it’s not, then you capture again and add to the information you already gathered,” Dick said. “So we basically just copied the biological system in a resource-constrained embedded system, which kept accuracy about the same but cut the energy consumption and time down to a fifth.”
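The multi-round loop Dick describes can be sketched as a capture, classify, and stop-early cycle. The code below is a minimal illustration with toy stand-ins, not the team’s system: `adaptive_classify`, the confidence threshold, and the toy capture and classifier functions are all hypothetical.

```python
def adaptive_classify(capture, classify, threshold=0.9, max_rounds=5):
    """Gather observations until the classifier is confident enough.

    capture(round_index) returns one new, cheap observation.
    classify(observations) returns (label, confidence in [0, 1]).
    """
    observations = []
    label, confidence = None, 0.0
    for round_index in range(max_rounds):
        observations.append(capture(round_index))
        label, confidence = classify(observations)
        if confidence >= threshold:
            break  # enough information: stop and spend no more energy
    return label, confidence, len(observations)


# Toy stand-ins: every glimpse adds a fixed amount of evidence.
def toy_capture(round_index):
    return 0.4


def toy_classify(observations):
    return "object", min(1.0, sum(observations))


label, confidence, rounds_used = adaptive_classify(toy_capture, toy_classify)
```

With these toy numbers the loop stops after three of the five allowed rounds, so the remaining captures are never paid for.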

Novel approaches to computing such as these may be further documented in publications like the brand new open-access journal npj Unconventional Computing, which is part of the Nature portfolio of journals. The journal’s first issue focuses on brain-inspired computing, and Lu serves as its editor-in-chief.

The Lego blocks of computing

The speedy evolution of AI techniques and applications has made it nearly impossible for integrated circuit designers to keep up.

“The models update every few months,” said Zhang. “IC design usually takes one or two years—so by the time we’re done, the model has already evolved a couple of generations forward, rendering our design ineffective. So how do we actually evolve our hardware design and IC design to catch up with the fast pace of the evolution of machine learning?”

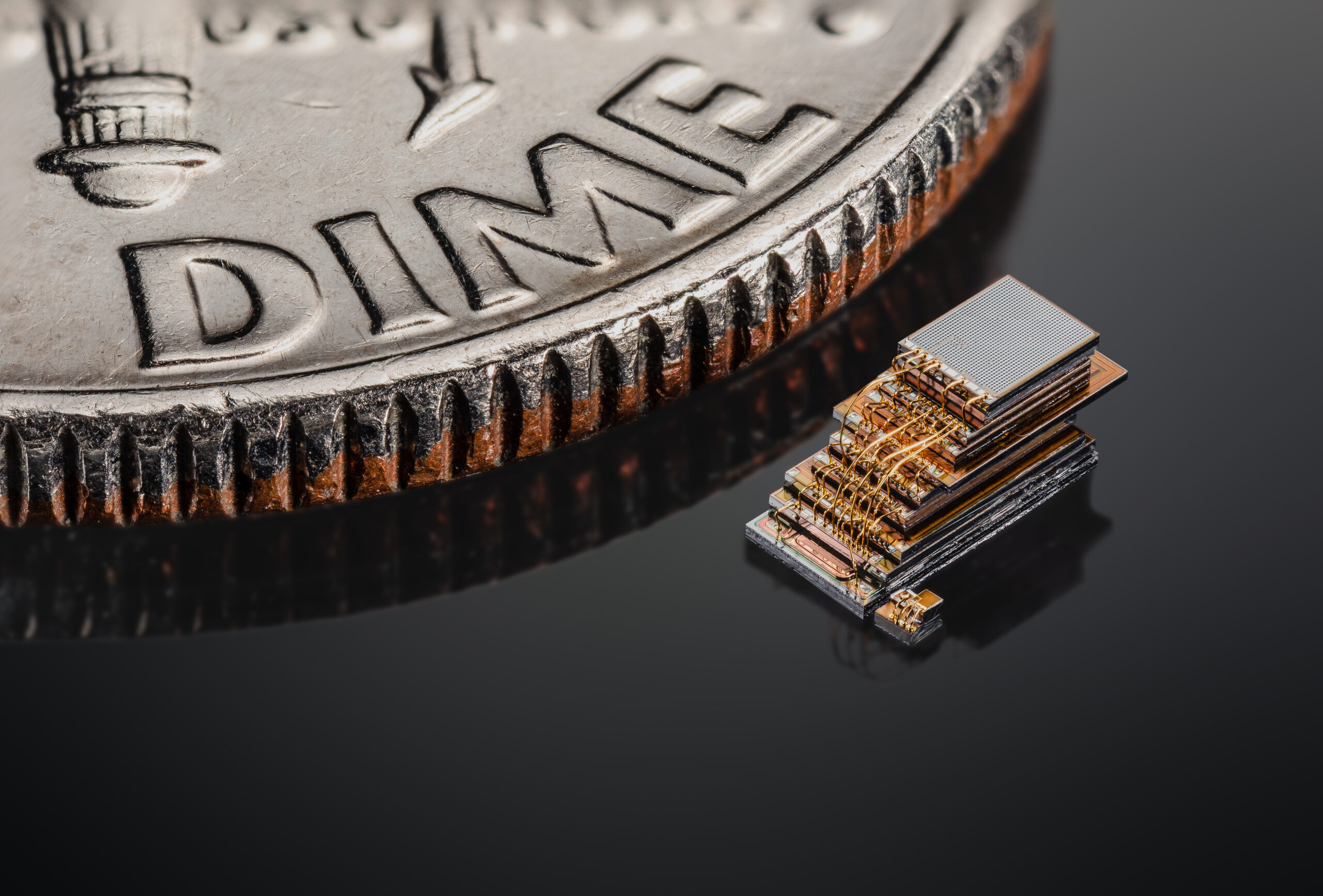

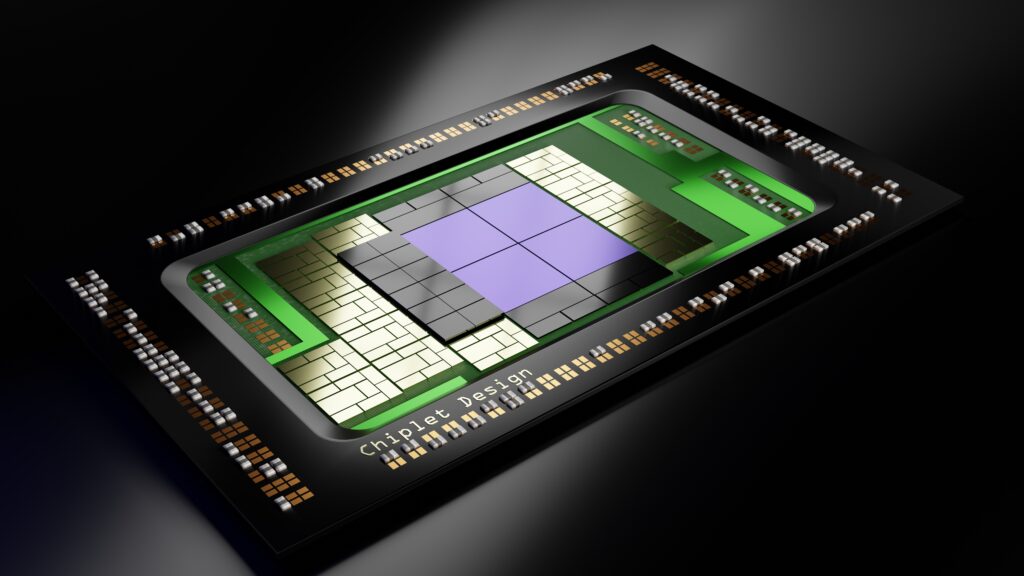

Zhang, Lu, and Flynn are working on a flexible solution that allows them to adapt and update integrated circuit designs as the models progress. Instead of designing large, fixed-use circuits, they silo the functions of an integrated circuit into subsystems called “chiplets.” The chiplets may then be integrated like Lego blocks into a larger design and adjusted, reused, and reprogrammed as needed. This versatile system involves special packaging for the chiplets, allowing them to communicate, interface, and pass data around efficiently like a single chip.

Due to the structure of the chiplets, they have a large number of shorter connections, making them faster, higher bandwidth, and more tightly integrated than traditional chips with multiple memory components. Lu works on compute-in-memory chiplets, bringing his improved memory architectures to the chiplet model.

“All the major companies use very high density memory chips that integrate very tightly with the logic,” he said. “The chiplets are one step further than that. You can not only have more efficient memory modules, but memory chiplets that both store and consume data locally.”

The University of Michigan team was contracted by the DARPA CHIPS Program to design and produce chiplets, along with industry developers Intel, Ferric, Jariet Technologies, Micron Technology, and Synopsys.

Using AI to design circuits

Designing and building the hardware to accelerate machine learning models is a major focus for ECE faculty. Another important aspect of their work involves using machine learning and other AI methods to aid in chip design.

The FASoC project, for example, has evolved into OpenFASoC, with the goal of creating an open-source tool for IC design researchers. Saligane has been a leader in the development of OpenFASoC, along with researchers external to the University of Michigan, collaborators at Google, and continued input from Wentzloff, Sylvester, and Blaauw.

“The main objective for the FASoC project is to build open source tools that others can use to design chips, SoCs, that do whatever they want,” said Wentzloff. “IC design, especially analog or mixed-signal IC design, is really difficult. It takes highly skilled people to do it. Our tools are enabling more people to design ICs without necessarily having to learn all of the skills required.”

“This is an electronic design automation problem,” added Saligane. “We’re taking a pre-trained large language model framework and fine-tuning it with examples from GLayout FASoC to generate a large number of designs used as a training dataset.”

Using software like large language models to generate hardware designs allows researchers to automate a lot of the process—making it quicker and more accessible. In turn, the generated designs are used to train a new large language model to optimize a chip layout based on a set of requested parameters.

“It’s not ChatGPT per se, but it’s actually something similar to ChatGPT, where you can talk to it to refine your design,” Saligane explained. “So, in general, it allows you to improve the productivity of the designer.”

In a related project, Kim has developed a framework to identify the most efficient AI model for a dataset, rather than developing the models manually. “The objective is to not only automatically identify efficient models during training but also to tailor AI models for seamless integration with custom-designed VLSI processors,” he said.

When his team applied this generalized framework to image classification and large language models, they were able to identify AI models that required 3–4 times less hardware complexity, power usage, and memory footprint for edge devices such as smartphones. While researchers usually put in a lot of time, effort, and money into manually designing AI models that may not be the most efficient, this method allows them to more easily develop AI models well suited to their hardware.

Better algorithms to transform efficiency

While many of the ECE faculty focus on chip design approaches to facilitate AI applications, a number of ECE faculty take a different approach to these same applications, managing the data and algorithms rather than adjusting the hardware.

For example, Prof. Mingyan Liu, Alice L. Hunt Collegiate Professor of Engineering, and Prof. Lei Ying are faculty leads in a $20M NSF AI Institute for Future Edge Networks and Distributed Intelligence (AI-EDGE), led by The Ohio State University, that focuses on transforming the efficiency of edge device networks.

“Our interest is to design new network architecture and algorithms that can support future AI applications and distributed intelligence,” said Ying. “We envision we can move the intelligence from a centralized cloud center to distributed network edges.”

Through this work, the AI-EDGE collaboration will make AI more efficient, interactive, and private, for applications including intelligent transportation, remote healthcare, distributed robotics, and smart aerospace.

In another project, Profs. Qing Qu and P.C. Ku designed a wafer-thin chip-scale spectrometer that is suitable for wearable applications, like measuring sweat or other aspects of human health. Qu’s team incorporated machine learning into the device’s operation to efficiently decode the signal emitted from the detector and collect the data, allowing them to simplify the design, reduce the number of bulky photodetectors, and miniaturize the device. The team continues to improve the accuracy of the devices by further developing the machine learning algorithm to match the hardware and applying deep learning techniques.

Additionally, Prof. Laura Balzano’s research group focuses on implementation, to get the machine learning models to work more efficiently and seamlessly with existing hardware. This may involve computing with less data, more structured versions of the data, or parallel processing.

Balzano focuses on identifying structures in data that allow more efficient computation. This may require compression or subsampling of the data, with the goal of having a smaller dataset that retains the same patterns as the original dataset. As an example, one could sample the larger dataset randomly—but this is easier said than done. It can be difficult and inefficient to extract a random sample of the original data from hardware memory. She noted that the ideal hardware for certain algorithms may never exist, because the structure of the hardware may not match the structure of the algorithm. For example, performing random sampling from data in memory efficiently on an existing integrated circuit would be a breakthrough for machine learning efficiency.
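In software, one standard way to draw a uniform random sample without jumping around in memory is reservoir sampling, which reads the data once, in order. The sketch below is offered only as an illustration of the subsampling problem; it is not described in the article as part of Balzano’s work, and the function name `reservoir_sample` and the sample sizes are hypothetical.

```python
import random


def reservoir_sample(stream, k, rng=None):
    """Uniform random sample of k items from an iterable, in a single pass."""
    rng = rng or random.Random()
    sample = []
    for n, item in enumerate(stream):
        if n < k:
            # Fill the reservoir with the first k items.
            sample.append(item)
        else:
            # Keep the new item with probability k / (n + 1) by drawing
            # a slot in [0, n] and replacing only if it lands in [0, k).
            j = rng.randint(0, n)  # randint is inclusive on both ends
            if j < k:
                sample[j] = item
    return sample


# Hypothetical dataset of 1,000 records, subsampled down to 10.
data = range(1000)
subset = reservoir_sample(data, 10, random.Random(0))
```

The sequential read pattern suits streaming data, but the random draw and conditional replacement still happen for every record, which hints at why efficient sampling in hardware remains an open problem.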

“I’m excited to see, over the next 10 years, the strides we make in designing algorithms for hardware and designing hardware to support the algorithms,” Balzano said.

Prioritizing collaboration, access, and training

The advanced research that ECE faculty are working on to facilitate AI isn’t possible in a silo. Collaborations within the department and the College of Engineering, with other universities, and with industry partners are key to the success of this work.

“Collaboration is becoming so essential that it’s impossible to do our research without it,” Zhang emphasized.

“For me, the most exciting thing about this research is working with a team of students and pulling all these different things together into a demonstration,” said Flynn. “Graduate students are very smart, but don’t have as much experience—and the technology has gotten really, really complicated. So when you can pull it off, there’s a huge satisfaction that the students have done so much.”

For many researchers, sharing their findings widely and freely is critical to establishing these collaborations, maintaining consistent research progress, and training the next generation of engineers.

“To facilitate broader experimentation and integration within the research community, we have made our source code publicly available,” said Kim, the Samuel H. Fuller Early Career Professor of Electrical and Computer Engineering. “This allows fellow researchers to readily apply, test, and potentially adopt our approach in their own work.”

“When you do things open source,” added Saligane, “you lower the barrier of entry for new people, which allows students to take on hard projects, reproduce results, and easily learn from others.”

Even when doing closed source projects, Dick emphasized the importance of decentralizing knowledge around AI and machine learning. “These tools will be very powerful in the future,” he said. “So there’s a big question: is this power going to be in the hands of the vast majority of people? Or is it going to be tightly controlled by very few people? I want to make sure that as these systems become more capable that they are available to normal people and not monopolized and controlled by a very small number of people who are most focused on gathering power.”

Part of distributing new research information to the next generation of engineers happens through formal coursework. In addition to several foundational and introductory undergraduate courses in circuit design and machine learning, ECE faculty offer a range of graduate level courses in these areas.

For example, ECE offers a series of VLSI design courses to teach students the fundamentals of integrated circuit design, as well as a principles of machine learning course. Flynn teaches a graduate class on integrated analog/digital interface circuits, and Lu teaches a course on semiconductor devices that discusses advanced memory concepts. Kim teaches a popular graduate special topics course on VLSI for wireless communication and machine learning, perfect for students interested in this developing field. Dick also teaches a series of courses in embedded system design and research, and Balzano teaches a course on digital signal processing and analysis.

ECE also offers a relatively new set of electrical engineering systems design courses created for second and third year undergraduate students. “These courses teach more applied research, provide a good opportunity to integrate things like machine learning into the lab components of these systems that students are creating and building,” said Wentzloff.

Ku is teaching a new course on next generation computing hardware, incorporating discussions of futuristic computers and advanced computing as smart devices are integrated into users’ daily lives. The course will “explore various futuristic computing hardware, including quantum computing, biological or bio-inspired computing, optical computing, and distributed computing.”

As the curriculum further integrates topics related to AI acceleration, U-M ECE students will be learning from the best, gaining expertise spanning these key topics in integrated circuit design, machine learning, and signal processing.

ECE faculty are well equipped to make a difference in AI applications for everyday users through their collaborative work—bringing ultra low power circuits, state-of-the-art memory devices, and fine-tuned algorithms to the efficient, powerful, and intelligent devices of the future.
