Hacking reality

Microphones that “hear” light; microprocessors that “tell” us secrets; self-driving cars that “see” fake objects; sensors that “feel” the wrong temperature. Our devices are under attack in new, increasingly sophisticated ways. Security researchers at CSE are exploring the limits of hardware and finding new, sobering vulnerabilities in our computers and homes.

Where does “computer” end, and “real world” begin? This line, drawn so firmly in our minds by apps and user interfaces, is finer than it appears.

Our computers aren’t bounded by programs, but by physics: the electrons flowing between a microprocessor and memory; device ports and wireless receivers; microphones, wires, speakers, screens, and other channels along which signals travel or cross from one medium into another. While programmers often categorize hardware as externalities that they don’t have to worry about, their programs are ultimately running on real-world machines with real-world imperfections.

With the widespread adoption of smart speakers, security cameras and vision systems, and embedded systems, the distinction gets even hairier — computers are suddenly a lot more perceptive than we’re used to, and the edges between computer and reality become viable channels for input. In tandem with this, microprocessors themselves have turned increasingly to clever tricks to achieve better performance without considering the observable side effects that start to manifest in the real world.

With this complexity in function come new avenues for hacking attacks. In a hypothetical closed computer, walled off from real-world physics, the weak points are code and human behavior. Trick someone into downloading a harmful script, or sneak it through a safe application unnoticed: hack accomplished.

Now, consider a hacker who can: open your garage door by aiming a laser through your window; find out your passwords, bank info, and more just by measuring the time it takes to access memory; slam a self-driving car’s brakes with a laser; or turn the temperature up on an incubator with electromagnetic waves.

These scenarios and more have all been demonstrated by security researchers at the University of Michigan. The hacks are well beyond eventualities: they’re possible right now. And as we rely ever more deeply on pervasive autonomous systems, internet of things devices, and elaborate methods to speed up microprocessors, we’ll start to experience these hacks unless there is some much-needed intervention.

“The security mechanisms we use were designed in the 60s,” says assistant professor Daniel Genkin. “So now, we need to catch up.”

Alexa and the laser pointer

In the realm of physical devices, smart home speakers like the Amazon Echo or Google Home present a classic example of that bridge between computer and reality: their only interface works by passively accepting audio from the environment. The shortcomings of this modality become apparent the moment a child decides to order something on Amazon without permission, needing only their voice and a parent’s PIN.

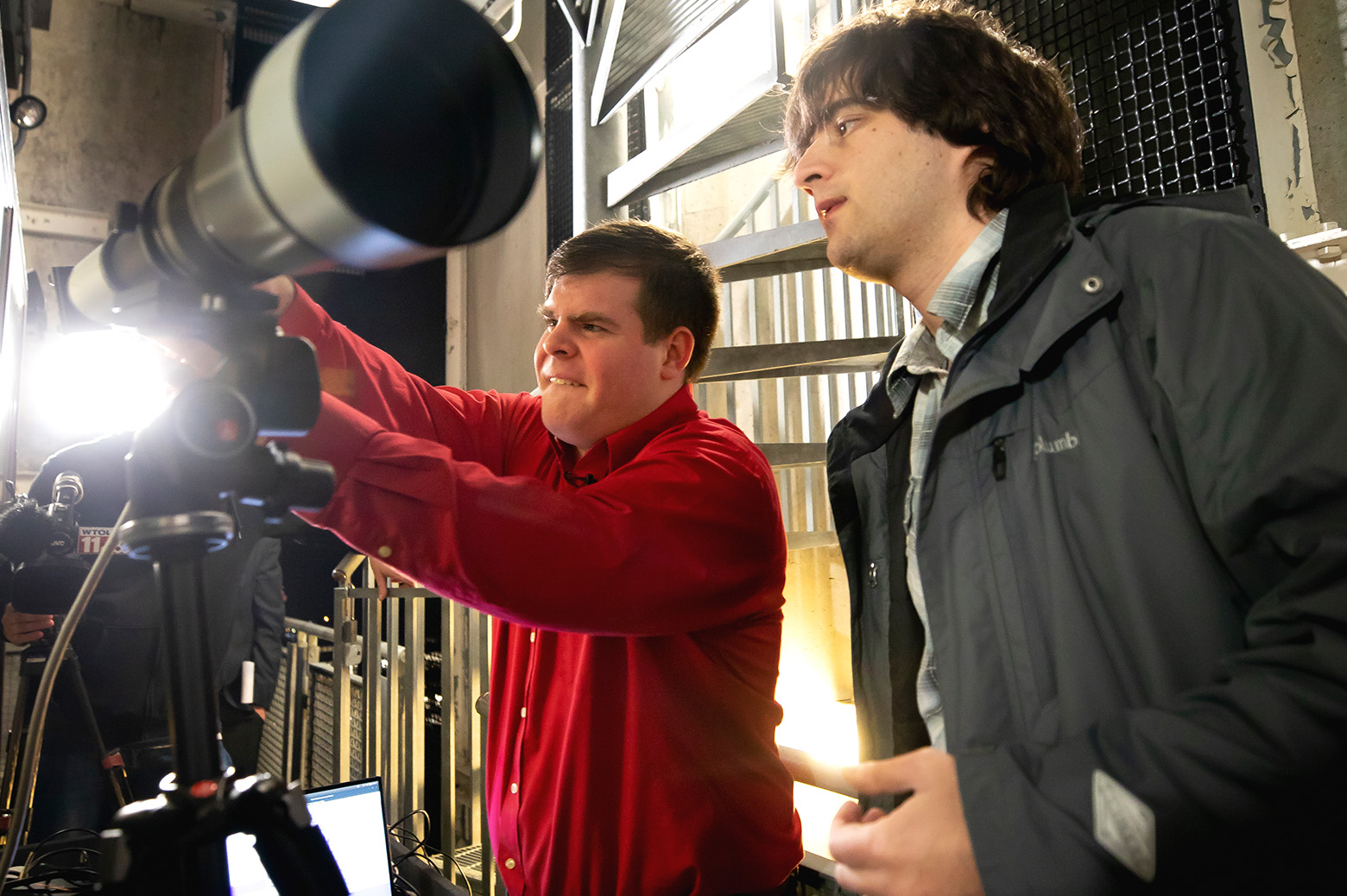

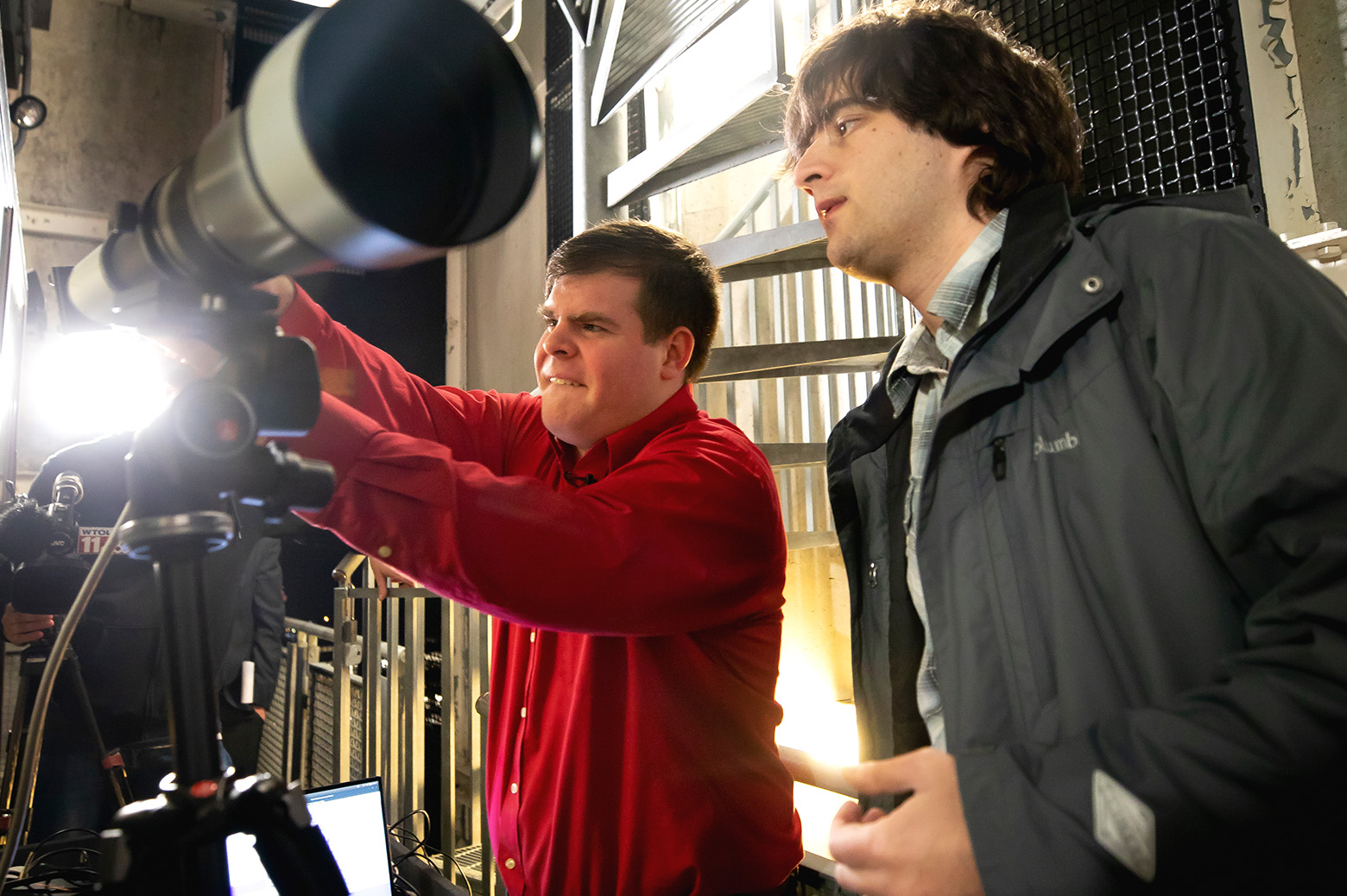

But even here there’s room for craftier work. Genkin, associate professor Kevin Fu, and research scientist Sara Rampazzi worked with a team led by PhD student Ben Cyr to inject unwanted commands into smart speakers and smartphones from 110 meters away. How did they do it? Not with sound, but with light.

In an as-yet unexplained quirk of physics, the team discovered that lasers could be encoded with messages that are interpreted by MEMS microphones as if they were sound. The team can record which command they want to inject, such as unlocking the front door, modulate the intensity of a laser with the audio signal, and then aim and fire.
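The modulation step can be sketched in a few lines. This is a minimal illustration, assuming plain amplitude modulation in which the laser's intensity is a constant bias plus a scaled copy of the audio waveform. The function name and the `bias` and `depth` values are hypothetical and are not taken from the team's setup.

```python
import math

def modulate_laser_intensity(audio, bias=0.5, depth=0.4):
    """Map audio samples in [-1, 1] to a laser intensity in [0, 1].

    Assumption: simple amplitude modulation around a constant bias.
    bias and depth are illustrative values, not the researchers' settings.
    """
    if bias - depth < 0.0 or bias + depth > 1.0:
        raise ValueError("bias +/- depth must stay within [0, 1]")
    # Clip each sample, then scale it and shift it onto the bias level.
    return [bias + depth * max(-1.0, min(1.0, s)) for s in audio]

# Stand-in for a recorded voice command: a 1 kHz tone sampled at 16 kHz.
sample_rate = 16_000
tone = [math.sin(2 * math.pi * 1_000 * n / sample_rate) for n in range(160)]
intensity = modulate_laser_intensity(tone)
```

The resulting list rises and falls with the audio signal, which is the property the attack relies on: the microphone responds to the varying light as if it were varying sound pressure.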

As Genkin points out, this is a very dramatic example of a semantic gap.

“Microphones were designed to hear sound. Why can they hear light?”

The range of the attack is limited only by the intensity of an attacker’s laser and their line of sight. In one compelling demonstration, the team used a laser from 75 meters away, at a 21° downward angle, and through a glass window to force a Google Home to open a garage door; they could make the device say what time it was from 110 meters away.

As for why the microphones are “hearing” the light, that remains an open question for future investigations.

“It’s actually an unsolved problem in physics,” Fu says. “There are plenty of hypotheses, but there’s no conclusion yet. So this work goes well beyond computing.”

For now, human-focused approaches to mitigation like extra layers of authentication between sensitive commands and execution may be the safest bet at the consumer level.

“The cyber-physical space is the worst of both worlds,” Genkin says. “The devices are small, they’re critical, and they’re cheap. And you have a race to the bottom of who can make it cheaper.”

Getting a sense for things

Direction, temperature, pressure, chemical composition: an array of sensors now exists to make specific measurements, enabling our machines to become more interactive and capable.

But tricking this range of functions doesn’t actually require a range of hacks.

“Even though the sensor or attack modalities may be different,” explains Connor Bolton, a PhD student in Fu’s lab, “when you start looking at how each different component is exploited you end up seeing that the methods used are similar. When you start defining everything by the methods, you can see that a lot of attacks exploit the same kinds of principles.”

The common weak point is generally the sensor’s transducer, the component that converts physical signals into electrical ones. These are the components that take the physical world and all of its complexity most directly into account.

“Reality for sensors is only what they’re programmed to see,” Fu explains. “We’re effectively creating optical illusions by using radio waves and sound waves that fool the computer.”

Rampazzi, for example, tricked temperature sensors with electromagnetic waves of a certain frequency, and the group has fooled other devices with sound, radio, and light waves. Sensors’ vulnerabilities are twofold: many are unable to distinguish correct from incorrect inputs, and many are unable to weed out interference from the environment. This combination of unreliable devices communicating in unreliable environments proves to be a nightmare for security.

“The moment you can no longer trust your environment,” Fu says, “it becomes much harder to program a system. It’s like programming Satan’s computer at that point.”

Unfortunately, with such a broad base of application areas, perceptual imperfections leave innumerable systems with diverse weaknesses. Take the temperature sensor for example — several different types of analog temperature sensors have been found to be susceptible to the same method of adversarial control, including devices used in critical path applications such as hospital incubators and chemical manufacturing plants.

“From meters away, or even in an adjacent room, an attacker could trick the internal control system of an infant incubator to heat up or cool down the cabin to unsafe temperatures,” says Rampazzi.

The uniform characteristics of these attacks leave the field with both a trove of opportunities and unique challenges — almost everything is a viable hack to be fixed, but coming up with broad, systematic solutions is more difficult.

Bolton has focused his recent research on standardizing the scattered efforts in hardware security. The fairly new field draws expertise from assorted backgrounds, ranging from computer science to physics, leading to a number of redundant and mismatched solutions for overlapping problems. With a standardized model, researchers in the field can start to talk about their findings in a unified way that’s more suitable to tackling fundamental issues.

“I hope people start using the same language and coming together,” he continues, “and things become more about researching the methods versus a ‘new attack on a new sensor’ that really uses the same methods as others.”

The CPUs that showed their hand

You don’t need microphones or sensors to penetrate a computer’s physical boundaries; chips, too, face hardware hacks that manipulate physical phenomena.

Thanks to a group of researchers including Genkin, standard practices in computer architecture have become mired in controversy. From the start of his career in 2013 to the present, he’s helped to demonstrate a slew of so-called side-channel attacks. These rely on the non-traditional byproducts of code’s execution, like a computer’s power usage and the time it takes to access memory, to find pointers to off-limits data. In other words, they target places where computing crosses into the real world.

One such weak point his group has homed in on is the use of speculative execution. The result of a series of innovations that help a CPU process instructions faster, this practice involves predictively accessing data before it’s needed by a program.

Unfortunately, this technique circumvents standard steps meant to ensure sensitive data is accessed properly, and data fetched predictively can be leaked. Two of the group’s most famous developments on this front are the Spectre and Meltdown exploits, which demonstrated how speculative execution could be abused to allow one application to steal data from another.
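The kind of leak described here can be illustrated with a toy simulation. It is not a working exploit and does not touch real hardware: the cache is modeled as a Python set, access latency as two made-up constants, and speculation as a read that happens before the bounds check is resolved. The class, the function names, and the latency values are all hypothetical.

```python
CACHE_HIT_NS, CACHE_MISS_NS = 10, 200   # made-up latencies for the model

class ToyCPU:
    def __init__(self, memory, public_len):
        self.memory = memory            # public bytes followed by secret bytes
        self.public_len = public_len
        self.cache = set()              # probe lines currently "cached"

    def flush(self):
        self.cache.clear()

    def victim_read(self, index):
        """Bounds-checked read with a modeled speculative side effect."""
        # Speculation: the value is fetched and used to touch a probe line
        # before the bounds check has been resolved.
        value = self.memory[index]
        self.cache.add(value)
        # Architectural result: the out-of-bounds read is rejected,
        # but the cache footprint left above remains.
        if index >= self.public_len:
            return None
        return value

    def probe_time(self, line):
        """Simulated time to access probe line `line`."""
        return CACHE_HIT_NS if line in self.cache else CACHE_MISS_NS

def leak_byte(cpu, index):
    """Recover memory[index] using only simulated access times."""
    cpu.flush()
    cpu.victim_read(index)
    times = [cpu.probe_time(line) for line in range(256)]
    return times.index(min(times))      # the one fast line reveals the byte

secret = b"hunter2"
cpu = ToyCPU(memory=b"public" + secret, public_len=6)
recovered = bytes(leak_byte(cpu, 6 + i) for i in range(len(secret)))
```

In this model `victim_read` never returns a secret byte to its caller, yet `recovered` ends up equal to `secret`, because the only thing the attacker observes is which probe line became fast.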

Because these attacks exploit weaknesses in the underlying hardware, defenses against them lie beyond the scope of traditional software safeguards. Solutions range from fundamentally changing how instructions are executed (which would mean a big hit for performance) to simply patching specific exploitation techniques through hardware and firmware as they become public. To this point, the latter approach has been preferred.

“The first attack variants were all using the processor’s cache to leak information, so then you started seeing research papers proposing a redesign of the cache to make it impossible to leak information that way,” says Ofir Weisse, a PhD alum who works on side-channel security. He says that this approach doesn’t address the fundamental problem, since the cache is only one of many ways to transmit a secret.

“The fundamental problem is that attackers can speculatively access secrets, and then can try to transmit them in numerous ways.”

In fact, Genkin’s work in 2020 showed that specific patches adopted to address the issue were themselves prone to exploitation – it turns out that data simply “flushed” out of the cache could be just as readily leaked elsewhere. It was a matter of identifying the initial pattern, says Genkin, and then finding the weak points in system after system.

Presently, Genkin and other researchers at U-M are still burrowing deeper into the rabbit hole, in search of both more vulnerabilities and viable defenses. But the question of where it ends can only be answered by the decisions of industry.

“I think at the end of the day there are going to be provably secure ways to mitigate all of these attacks,” says Kevin Loughlin, a PhD student in this area, “but I think people will make a conscious security-performance tradeoff to leave themselves open to certain things that they view as not feasible.”

Green doesn’t always mean “go”

If what we’ve learned above is any indication, giving your car a pair of “eyes” is asking for trouble. A team at U-M was the first to show a very glaring reason why this is so, and it’s as intuitive as pointing a laser at a light sensor.

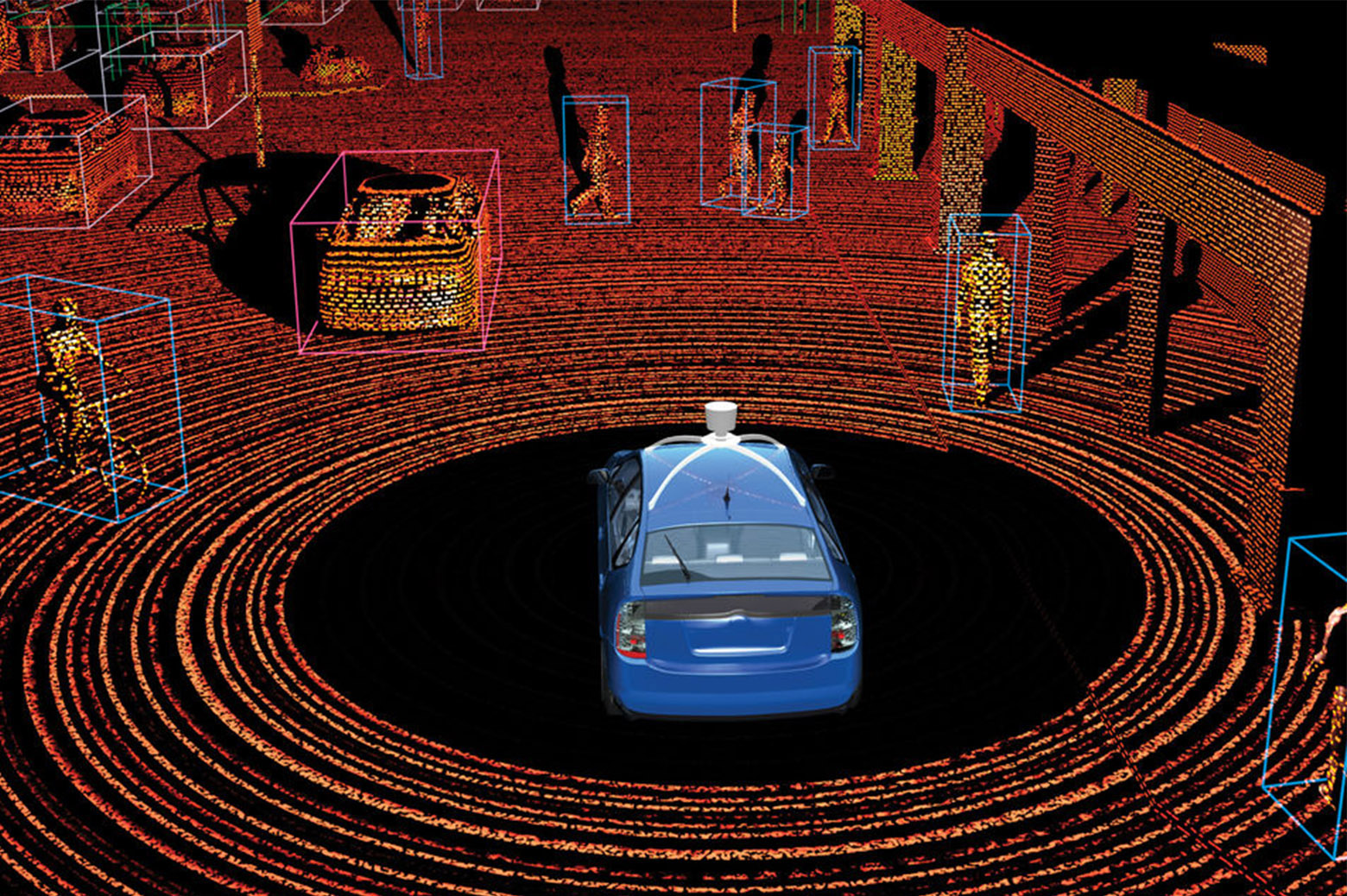

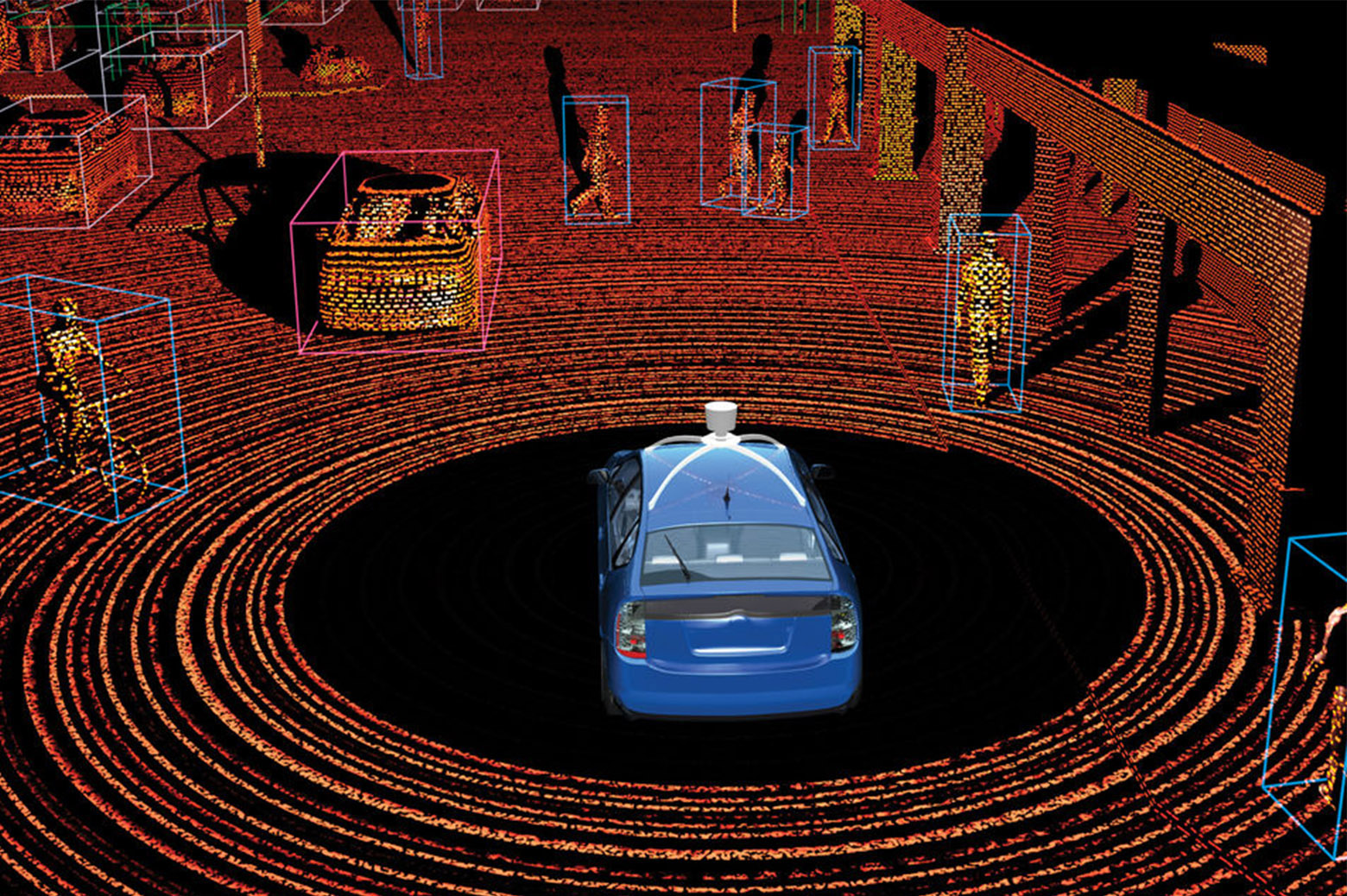

Self-driving cars rely on a suite of sensors and cameras for their vision, and all of it is additionally wrapped in a protective machine learning system. This system both makes sense of the heterogeneous inputs the vision package receives and makes it more difficult for incorrect or malicious signals to have an effect on the car’s overall perception. Until this work, those malicious signals had mainly targeted the car’s radar and cameras.

A team led by PhD student Yulong Cao, professor Z. Morley Mao, Kevin Fu, and research scientist Sara Rampazzi broke new ground by designing an attack on vehicular LiDAR (Light Detection and Ranging) sensors. LiDAR calculates the distance to objects in its surroundings by emitting a light signal and measuring how long it takes to bounce off something and return to the sensor.
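The time-of-flight calculation is simple enough to write out. The sketch below assumes the pulse travels at the vacuum speed of light and ignores any processing delay inside the sensor; the function name and the 200-nanosecond example are illustrative only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def distance_from_round_trip(round_trip_s):
    """Distance to the reflecting object, in meters."""
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2

d = distance_from_round_trip(200e-9)  # a 200-nanosecond round trip, about 30 m
```

Because light covers roughly 30 centimeters per nanosecond, small differences in timing translate into large differences in reported distance.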

A LiDAR unit sends out tens of thousands of light signals per second, while the machine learning model uses the returned pulses to paint a fuller picture of the world around the vehicle.

“It is similar to how a bat uses echolocation to know where obstacles are at night,” says Cao.

The problem the team discovered is that these pulses can be spoofed, meaning that fake pulses can be inserted into the genuine signal. To fool the sensor, all an attacker needs to do is shine their own light at it.
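A short sketch shows why an injected pulse turns into a phantom object. It assumes a naive sensor that converts every pulse arriving in its listening window into a distance, with no way to tell who sent it, and it ignores practical details such as timing the injected pulses against the sensor's own. All names and distances are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def round_trip_delay(distance_m):
    """Delay between firing a pulse and its echo from distance_m meters."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S

def perceived_distances(arrival_delays_s):
    """What a naive sensor reports: one distance per pulse, whatever its source."""
    return [SPEED_OF_LIGHT_M_PER_S * t / 2 for t in arrival_delays_s]

genuine = [round_trip_delay(40.0)]  # real echo from a car 40 m ahead
spoofed = [round_trip_delay(5.0)]   # attacker's pulse, timed to look 5 m away
scene = perceived_distances(genuine + spoofed)
```

Here `scene` contains both the real car at about 40 meters and a nonexistent object at about 5 meters; nothing in the arrival time distinguishes one from the other.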

The team used this technique to dramatic effect. By spoofing a fake object just in front of the car, they were able to both keep it stopped at a green light and trick it into abruptly slamming on its brakes. The fake object could be crafted to meet the expectations of the machine learning model, effectively fooling the whole system. The laser could be set up at an intersection or placed on a vehicle driving in front of the target.

“Research into new types of security problems in autonomous driving systems is just beginning,” Cao says, “and we hope to uncover more possible problems before they can be exploited out on the road by bad actors.”

Hacking – more than just code

With computers that talk, phones that hear, cars that see, and a world of devices that feel, we’ve entered bold new territory for the realm of cyber defense.

“You can’t get away from physics,” says Fu. “Programmers are taught to constantly ignore the details. This is the underbelly of computing — those abstractions lead to security problems.”

And that only scratches the surface of the physical anomalies being discovered. While philosophers debate the nature of human reality, computers are beginning to face an existential crisis of their own.

“It got to the point where it’s all rotten,” says Genkin. “It’s rotten to the core, and to get the rot out you have to start digging.”
