How the Net Was Won: Michigan Built the Budding Internet

Douglas Van Houweling was collapsed in a chair, overjoyed—but daunted by the task ahead.

Van Houweling had received unofficial word a few weeks earlier that the National Science Foundation (NSF) had accepted his group’s proposal to upgrade an overloaded NSFNET backbone connecting the nation’s handful of supercomputing sites and nascent regional computer networks. But many details had still needed to be negotiated with the NSF before a public announcement could be made. Now, with those arrangements finally completed, that announcement, with some fanfare, would come the following day—on November 24, 1987.

The core of the team that Van Houweling and Eric Aupperle had knit together—and that for six long weeks had labored 20 hours a day, seven days a week, obsessing over every detail of its response to the NSF’s Request For Proposal—had gathered in Aupperle’s Ann Arbor home, and had stayed late into the night. It would only have these next few hours to exchange congratulations, to celebrate—and to start thinking about what would come next—before the real work would begin.

The Aupperle living room surged all evening with anticipation and speculation. As the night wound down, someone sitting on the floor next to the sofa said, “I think this is going to change the world.”

And yet they had no idea.

Michigan at the Forefront

Van Houweling was hired in late 1984 as the University of Michigan’s first vice-provost for information technology. Michigan Engineering Dean James Duderstadt and Associate Dean Daniel Atkins had fought to create the position, and to bring in Van Houweling, believing it critical to the University’s efforts to solidify and extend its already substantial computer standing.

The transformative power of computing would begin to gain credence by the 1960s, but the University of Michigan was at the forefront of the movement a full decade earlier. In 1953 its Michigan Digital Automatic Computer (MIDAC)—designed and built to help solve complex military problems—was only the sixth university-based high-speed electronic digital computer in the country, and the first in the Midwest. And in 1956 the legendary Arthur Burks—co-creator of the Electronic Numerical Integrator and Computer (ENIAC; considered the world’s first computer)—had established at Michigan one of the nation’s first computer science programs.

Michigan also became involved in the U.S. Department of Defense CONCOMP project, which focused on the CONversational use of COMPuters (hence the name), and by the mid-1960s the University of Michigan had established the Michigan Terminal System (MTS)—one of the world’s first time-sharing computer systems, and a pioneer in early forms of email, file-sharing and conferencing. In 1966 the University of Michigan (along with Michigan State University and Wayne State University) also created the Michigan Educational Research Information Triad (MERIT; now referred to as Merit), which was funded by the NSF and the State of Michigan to connect all three of those universities’ mainframe computers.

And it was Merit—with Van Houweling as its chairman—that would be critical in securing this latest NSF grant to rescue the sputtering NSFNET.

The Slow Spread of Networked Computing

As computers grew in importance among academics, computer scientists and private and government researchers, efforts intensified to link them to share data among various locations. The Department of Defense Advanced Research Projects Agency (DARPA), which was leading this inquiry, had decided by the mid-1960s that many machines could share a single long-distance communication link more efficiently by using a new method, packet switching, than by using the established circuit-switching method. In 1969 DARPA established the first major packet-switching computer network, called the ARPANET, to connect researchers at various locations, and for several years it would test these communications links among a few private contractors and select U.S. universities.

Michigan was a contemporary of the ARPANET through CONCOMP and Merit. By the mid-1970s Merit’s network had added Western Michigan University (and soon would include every one of Michigan’s state universities). And as one of the earliest regional networks, Merit was among the first to support the ARPANET’s agreements on exchange definitions—the so-called Transmission Control Protocol/Internet Protocol (or TCP/IP) protocols—along with its own.

The ARPANET slowly proved an extremely useful networking tool for the still rather limited and relatively small science research, engineering and academic communities. By 1981 the ARPANET’s tentative successes would inspire the Computer Science Network (CSNET)—an NSF-supported network created to connect U.S. academic computer science and research institutions unable to connect to the ARPANET due to funding or other limitations. But as the ARPANET/CSNET network was growing, concerns nonetheless were building among scientists and academics that the United States had been falling behind the rest of the world—and in particular Japan—in the area of supercomputing.

To address the perceived supercomputing gap, the NSF purchased access to the several then-existing research laboratory and university-based supercomputing centers, and it initiated a competition to establish four more U.S. supercomputer centers. Michigan was among those that had prepared a bid—which it already had submitted by the time Van Houweling arrived on campus. But Van Houweling would quickly learn that Michigan’s proposal, though among the top-rated technically, was not going to succeed—if for no other reason than because it contemplated use of a supercomputer built in Japan. Cornell, Illinois, Princeton, and UC-San Diego were awarded the first four sites (and a Carnegie Mellon-University of Pittsburgh site was added later).

With a burgeoning group of supercomputing centers now in place—and a growing number of NSF-supported regional and local academic networks now operating across the country—the NSF needed to develop a better, faster network to connect them. Its NSFNET, operational in 1986, was at first modestly effective. But an immediate surge in traffic quickly swamped its existing infrastructure and frustrated its users.

By 1987 the NSF was soliciting bids for an NSFNET upgrade. The Merit team, and Van Houweling—who had been discussing precisely this kind of a network with the NSF for several years—were ready to pounce.

The NSFNET Takes Off

Though the NSF and the State of Michigan funded Merit, it was and still is hosted by the University of Michigan, and all of its employees are University employees. Michigan Engineering professor Bertram Herzog was named Merit’s first director in 1966, and Eric Aupperle—who was now Merit’s president, and principal investigator for the NSFNET bid proposal—had been Herzog’s first hire as senior engineer.

The NSF had encouraged NSFNET upgrade respondents to involve members of the private sector, but nobody needed to tell that to Van Houweling. As Merit’s chairman, Van Houweling already had been cajoling his well-established contacts at IBM, who in turn convinced MCI—an upstart telecommunications company looking to make a name for itself in the wake of the breakup of the AT&T monopoly—to join the fold. IBM committed to providing hardware and software, as well as network management, while MCI would provide transmission circuits for the NSFNET backbone at reduced rates. With these commitments in place, Gov. James Blanchard agreed to contribute $1 million per year over five years from the state’s Michigan Strategic Fund.

And the bid was won.

Now the team would need to build an extensive and upgraded infrastructure, using newer and more sophisticated networking hardware of a type never used before—and it would have to do it fast.

The first generation NSFNET had employed a University of Delaware faculty-produced router—the device that forwards data among networks. That original router was nicknamed the Fuzzball, and ran at 56 kilobits per second. But the next generation was supposed to run at 1.5 megabits per second—or nearly 30 times faster.

“A whole different category,” says Van Houweling, “and nothing like that existed. Today, you can buy a router for your house for about $50 to $100. But there were no routers to speak of then. You could buy one—for about a half million. But IBM committed to build it and write the software—for free!”

MCI’s Richard Liebhaber later recalled, during a 2007 NSFNET 20th anniversary celebration, how quickly things were moving—and how much more there was to learn. “All this baloney about, ‘We knew what we were doing,’” said Liebhaber. “When we committed to this, we didn’t have anything. We had ideas, but that was about it.”

But somehow, it all worked.

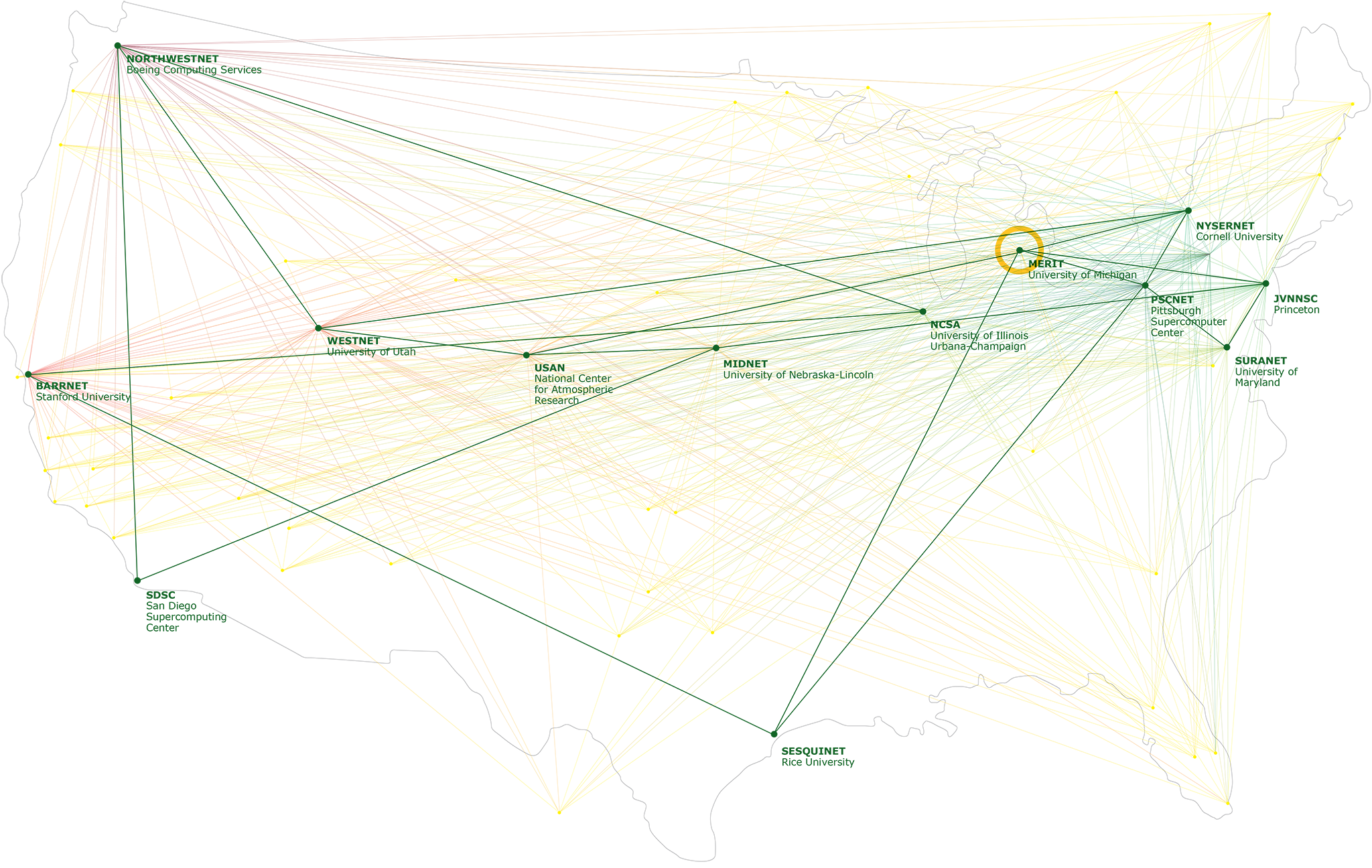

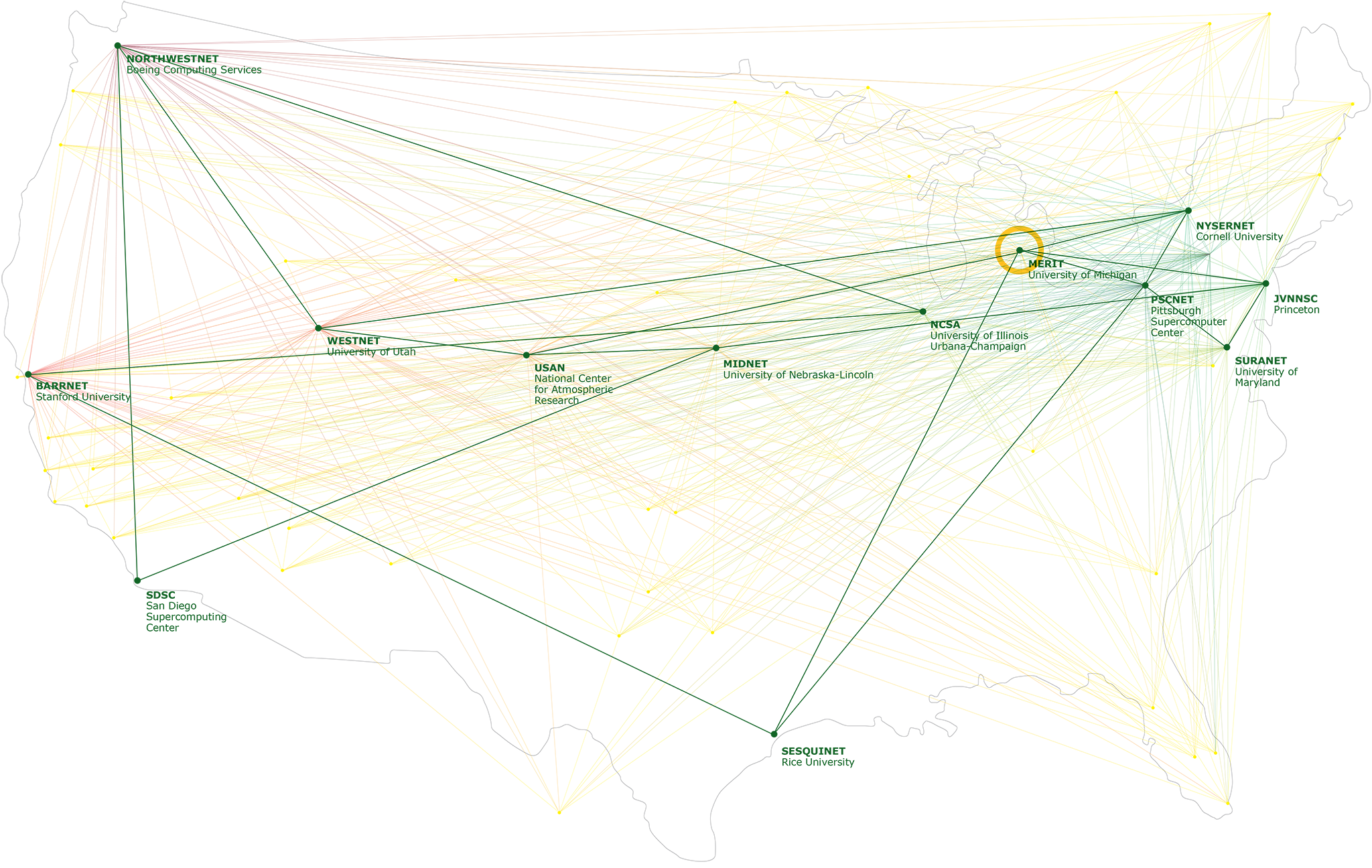

Merit committed to making the new backbone operational by August 1988, and it accomplished that feat by July of that year, just eight months after the award. The newer, faster NSFNET connected 13 regional networks and supercomputer centers, representing more than 170 constituent campus networks. This upgraded network of networks saw demand surge 10 percent in the first month, a monthly growth rate that would hold firm year after year.

“At first we thought it was just pent-up demand, and it would level off,” says Van Houweling. “But no!”

Merit had exceeded its own early expectations—though Aupperle modestly attributed that to “the incredible interest in networks by the broader academic communities” rather than to the new network’s speed and reliability. But the results were indisputable. With an operations center that operated nonstop, Merit’s staff expanded from 30 to 65 and overflowed into a series of trailers behind the North Campus computing center.

Craig Labovitz was a newly hired Merit engineer who had abandoned his PhD studies in artificial intelligence at Michigan because he was so fascinated by his at-first-temporary NSFNET work assignment. “Most people today don’t know that the heart of the Internet was once on North Campus,” says Labovitz. “It was where the operations and on-call center was, and where all the planning and the engineering took place.” Labovitz—who put to productive use the expertise he gleaned during his NSFNET tenure—now operates DeepField, an Ann Arbor-based cloud and network infrastructure management company.

The NSFNET soon proved to be the fastest and most reliable network built up to that time. The new NSFNET technology quickly replaced the Fuzzball. The ARPANET was phased out in 1990. And by 1991 the CSNET wasn’t needed anymore, either, because all the computer scientists were connecting to the NSFNET. Because the NSFNET was the first large-scale, packet-switched backbone network infrastructure in the United States, almost all traffic from abroad was traversing it as well, and its most fundamental achievement, a high-speed network service that evolved to T1 speeds (1.5 megabits per second) and later to T3 speeds (45 megabits per second), would essentially cover the world.

“Throughout this whole period, it was all about the need to support university research that drove this project,” says Van Houweling. “Researchers needed to have access to these supercomputing facilities, and the way to do it was to provide them with this network. Nobody had the notion that we were building the communications infrastructure of the future.”

But that’s the way it turned out.

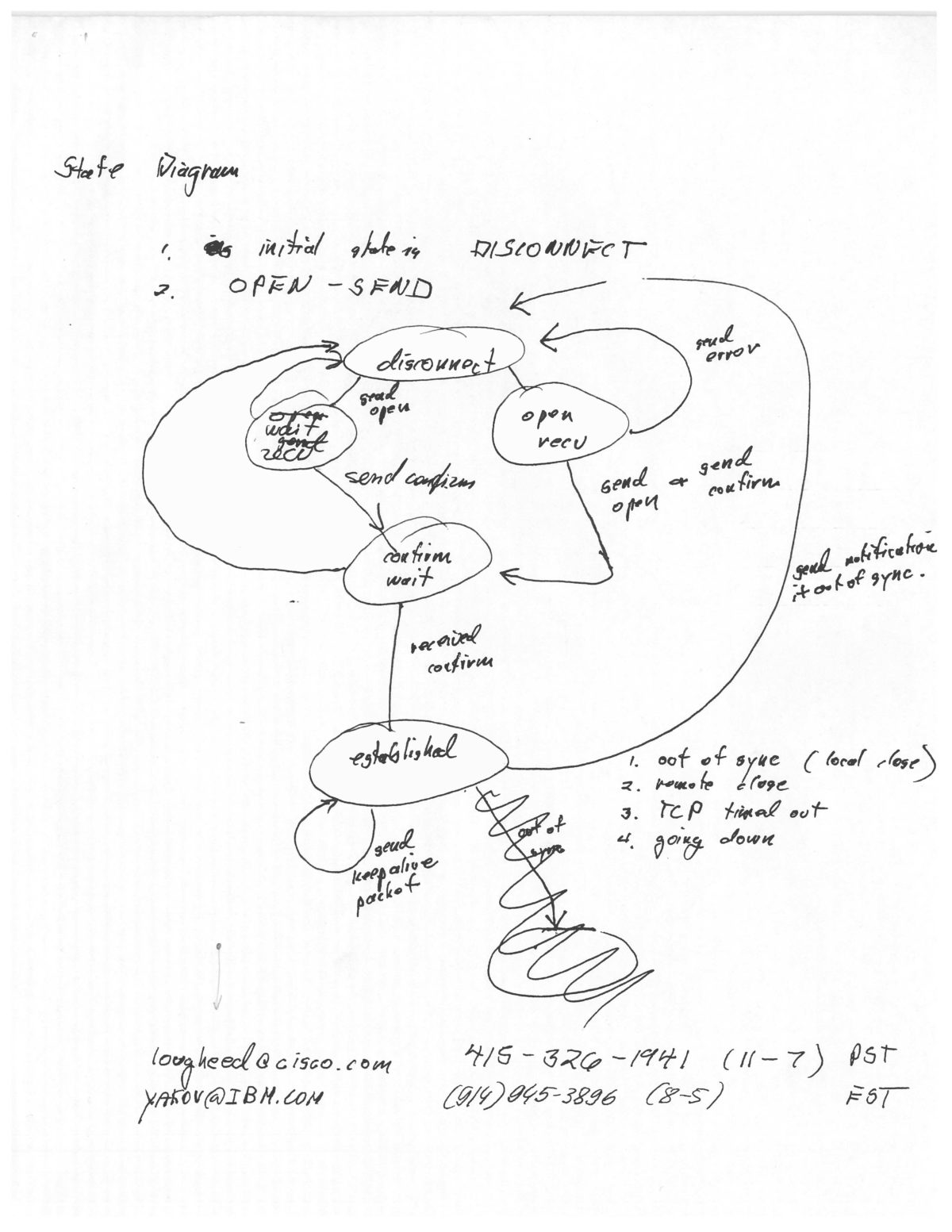

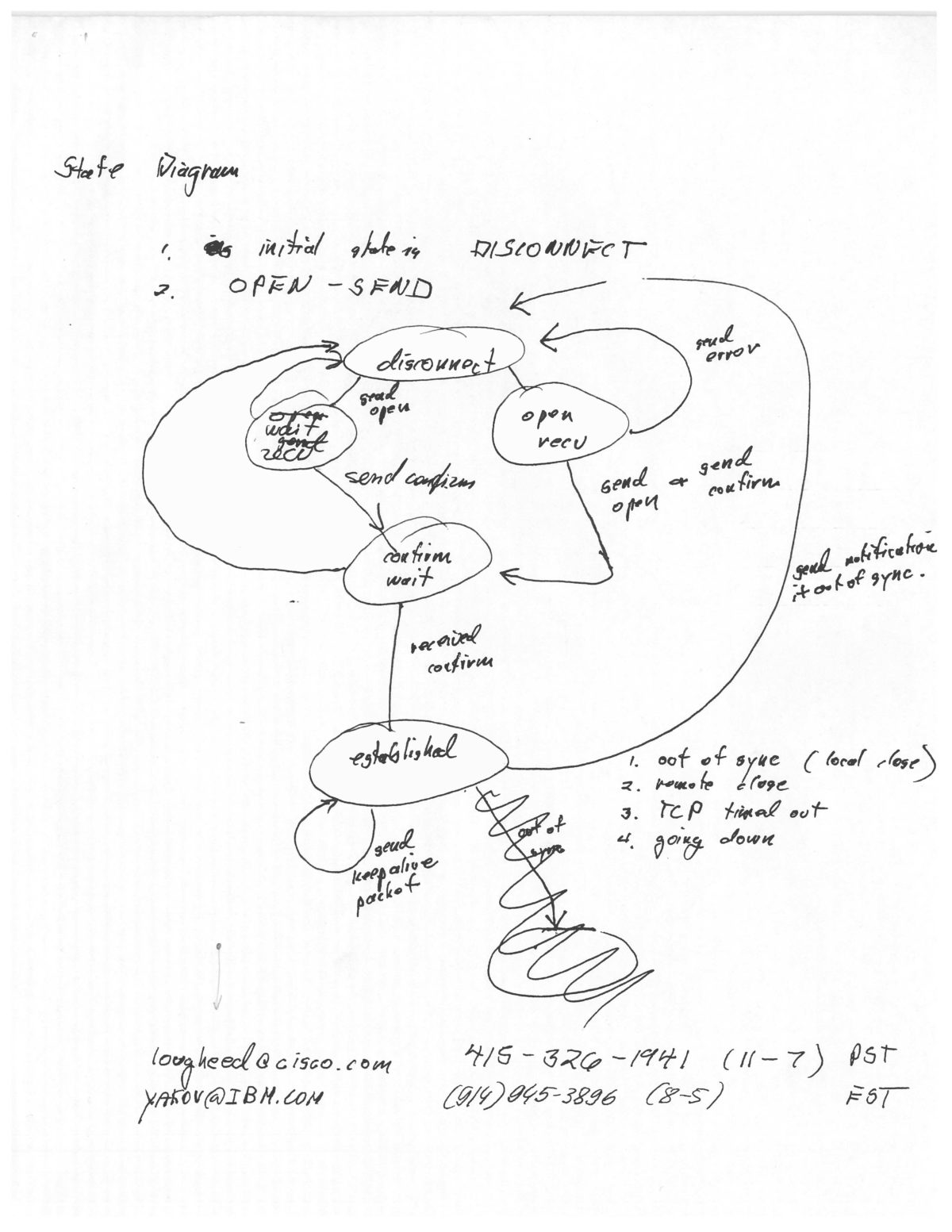

The Protocol Wars

To the extent that any one or more individuals are said to have “invented” the Internet, credit generally goes to American engineers Vinton Cerf and Robert Kahn. Along with their team at DARPA in the mid-1970s, Cerf and Kahn developed (based on concepts created by Louis Pouzin for the French CYCLADES project) and later implemented the TCP/IP protocols for the ARPANET. The TCP/IP protocols were also referred to as “open” protocols and later, simply, as the Internet protocols. (Cerf also may have been the first to refer to a connected computer network as an “internet,” though the “Internet” would not fully come to the attention of the general public for another two decades.)

The significance of the NSFNET’s success was not just that it scaled readily and well, but that it did so using the open protocols during a time of stress and transition.

The open protocols had proved popular among computer scientists accustomed to using the ARPANET and the CSNET, but they still were relatively new and untested. As the NSF considered the NSFNET’s standards, there remained deep skepticism—and perhaps no small amount of self-interest—among commercial providers that the open protocols could effectively scale. Every interested corporate enterprise was pressing for its own protocols.

“There was a race underway between the commercial interests trying to propagate their proprietary protocols, and the open protocols from the DARPA work,” says Daniel Atkins, now professor emeritus of electrical engineering and computer science at Michigan Engineering and a professor emeritus of information at the School of Information. “AT&T had intended to be the provider of the Internet.”

“Once the NSF made the [NSFNET upgrade] award, AT&T’s lobbyists stormed the NSF offices and tried to persuade them that this was a terrible idea,” says Van Houweling.

The NSFNET’s immediate challenge, therefore, was to avoid a flameout, explains Van Houweling. Getting overrun would have given this open model “a black eye”—enabling the telecommunications and computing companies to “rush in and say, ‘See, this doesn’t work. We need to go back to the old system where each of us manages our own network.’”

But as Aupperle noted, “those networks weren’t talking to each other.” Proprietary protocols installed in the products of Digital Equipment Corporation, IBM, and other computer manufacturers at that time were hierarchical, closed systems. Their models were analogous to the telephone model, with very little intelligence at the devices, and all decisions and intelligence residing at the center—whereas the Internet protocols have an open, distributed nature. The power is with the end-user—not the provider—with the intelligence at the edges, within each machine.

“AT&T’s model was top-down management and control. They wouldn’t have done what the NSFNET did,” says Van Houweling. Unlike their proprietary counterparts, open protocols weren’t owned by anyone—which meant that no one was charging fees or royalties. And that anyone could use them.

As it happened, “adoption of the open protocols of the NSFNET went up exponentially, and it created what we would now call a viral effect, where everybody wanted it, including [eventually] the commercial world,” says Atkins. “It met the need and swamped the competition.”

But the battle over proprietary standards would not be won easily or quickly. Before the open protocols could definitively prove their feasibility, a prominent competing effort in Europe to build and standardize a different set of proprietary network protocols, the Open Systems Interconnection (OSI) suite, was continuing to garner support not just from the telecommunications industry but also from the U.S. government. This debate wouldn’t fully and finally end for at least a decade, until the end of the 1990s.

Until the upgraded NSFNET started to gain traction, “everything had been proprietary. Everything had been in stovepipes,” says Van Houweling. “There had never been a network that had the ability to not only scale but to also connect pretty much everything.”

“It was the first time in the history of computing that all computers spoke the same language,” recalled IBM’s Allan Weis at the NSFNET 20th anniversary. “If [a manufacturer] wanted to sell to universities or to a research institution that talked to a university, [it] had to have TCP/IP on the computer.”

Proprietary protocols “had a control point,” Weis added. “They were controlled by somebody; owned by somebody. TCP/IP was beautiful in that you could have thousands of autonomous networks that no one owned, no one controlled, just interconnecting and exchanging traffic.”

And it was working.

The Bumpy Road to Commerce

But continued growth would bring change, and change would bring controversy.

“When the NSFNET was turned on, there was an explosion of traffic, and it never turned off,” says Van Houweling. Merit had a wealth of experience, and along with MCI and IBM it had for more than two years exceeded all expectations. But Merit was a nonprofit organization created as a state-based enterprise. To stay ahead of the traffic the NSFNET would have to upgrade again—from T1 to T3. No one had ever built a T3 network before.

“To do this, you had to have an organization that was technically very strong, and was run with the vigor of industry,” reasoned Weis. This would require more funding, which was not likely to come from the NSF.

In September 1990, the NSFNET team announced the creation of a new, independent nonprofit corporation—Advanced Network & Services, Inc. (ANS), with Van Houweling as its chairman. With $3 million investments from MCI, IBM and Northern Telecom, ANS subcontracted the network operation from Merit, and the new T3 backbone service was online by late 1991. The T3 service represented a 30-fold increase in bandwidth—and took twice as long as the T1 network to complete.

At this point the NSFNET still was servicing only the scientific community. Once the T3 network was installed, however—and some early bumps smoothed over—commercial entities were seeking access as well. ANS created a for-profit subsidiary to enable commercial traffic, but charged commercial users rates in excess of its costs so that the surplus could be used for infrastructure and other network improvements.

But several controversies soon arose. Regional networks desired commercial entities as customers for the same reasons that ANS did, but felt constrained by the NSF’s Acceptable Use Policy, which prohibited purely commercial traffic (i.e., not directly supporting research and education) from being conveyed over the NSFNET backbone.

Even though non-academic organizations willing to pay commercial prices were largely being denied NSFNET access, the research and education community nonetheless raised concerns about how commercialization would affect the price and quality of its own connections. And on yet another front, commercial entities in the fledgling Internet Service Provider market complained that the NSF was unfairly competing with them through its ongoing financial support of the NSFNET.

Inquiries into these matters—including Congressional hearings and an internal report by the inspector general of the NSF—ultimately resulted in federal legislation in 1992 that somewhat expanded the extent to which commercial traffic was allowed on the NSFNET.

But the NSF always understood that the network would have to be supported by commerce if it were going to last. It never intended to run the NSFNET indefinitely. Thus a process soon commenced whereby regional networks became, or were purchased by, commercial providers. In 1994 the core of ANS was sold to America Online (now AOL), and in 1995 the NSF decided to decommission the NSFNET backbone.

And the NSFNET was history.

“When we finally turned it over [to the commercial providers], the Internet hiccupped for about a year,” according to Weis, because the corporate entities weren’t as knowledgeable or as prepared as they needed to be. IBM—which had several years’ head start on the competition in building capable Internet routers—didn’t pursue that business because others at IBM (outside of its research division, of which Weis was a part) still thought proprietary networks would ultimately win the protocol wars—even as the NSFNET was essentially becoming the commercial Internet. Cisco stepped into the breach, and following this initially rocky period it developed effective Internet router solutions—and has dominated the field ever since.

“Whenever there are periods of transition … by definition they involve change and disruption,” says Labovitz. “So initially it was definitely bumpy. Lots of prominent people were predicting the collapse of the Internet.

“In hindsight, we ended up in a very successful place.”

But What If It Had Failed?

Van Houweling is fond of saying the Internet could only have been invented at a university because academics comprise “the only community that understands that great things can happen when no one’s in charge.”

“The communications companies that resisted did so on the basis that there was no control,” Van Houweling says. “From its own historical perspective, this looked like pure chaos—and unmanageable.”

Labovitz agrees. “It was an era of great collaboration because it was a non-commercial effort. You were pulling universities together, so there were greater levels of trust than there might have been among commercial parties.”

So what would the Internet look like today if it had gone AT&T’s way? One possible scenario is that the various commercial providers may well have created a network in silos, with tiered payments depending on the type of content, the content creator and the intended consumer—without the unlimited information sharing.

“It’s hard to predict precisely what would have happened,” Atkins admits, but instead of being “open and democratizing,” it might have been “a balkanized world,” with “a much more closed environment, segmented among telecommunications companies.”

“Now, you just have to register, and you can get an IP address and you can put up a server and be off and going,” Atkins says. “If AT&T were running it, it would have to set it up for you. It would have control over what you could send, what rates you could charge.”

“In the early [1980s pre-NSFNET] CompuServe/AOL days, you could only get the information they provided in their walled gardens,” says Van Houweling. “The amount of information you could access depended on the agreements CompuServe or AOL had with their various information providers.”

Van Houweling suggests that most likely the OSI protocols would have prevailed. But it wasn’t going to be easy to “knit together” all the telecommunications companies. OSI had been developing as a series of political compromises, with variations provided for each provider. And commercial carriers had remained fundamentally opposed to the concept that anyone, anywhere could just hook up to the network. It didn’t fit their traditional revenue models.

“I do think it is inexorably true that eventually we would have had a network that tied everything together. But I don’t think it would have been similar [to what we have now],” says Van Houweling.

How might we have progressed from the old “walled gardens” to what we have today—which is that no matter which computer you sit down to, you have access to the world?

“I frankly don’t know if we would have gotten there,” Van Houweling says. “It might have been the end of the Internet.”
