AI for accessibility

Technology has become an increasingly integral part of our sensory experience of the world, helping us interact with our environments and engage our senses in unprecedented ways.

For individuals with disabilities, being in the world is no less rich and vibrant, but technology is not always designed with their unique experiences in mind. Most products are developed with the assumption that their users do not have disabilities, creating barriers to access for wide swathes of the population.

“People with disabilities experience the world very differently than those without disabilities,” said Dhruv Jain, assistant professor of computer science and engineering (CSE) at the University of Michigan, long-time proponent of accessible technology, and member of the deaf or hard-of-hearing (DHH) community. “Unfortunately, this is not reflected in mainstream design principles. Most new technologies and user interfaces are made for the average, non-disabled person.”

Faculty in the Human-Centered Computing Lab in CSE, like Jain, are not satisfied with this status quo. Informed by their personal encounters with disability, whether through their own lived experience or that of friends and mentors, Jain and fellow CSE professor Anhong Guo want to see a tech world in which accessibility is at the forefront of UX/UI design.

“What would happen if we gave people with disabilities a seat at the table in the design of new technologies?” asked Jain.

The answer, according to Guo, is a better user experience for everyone. “One thing we find over and over in our research is that incorporating accessible principles in tech design benefits all users, not just those with disabilities.”

Empowering individuals who are blind or have low vision

Guo’s research focuses on bridging the gap between the digital world and the needs of the blind and low-vision community, which comprises more than 300 million individuals worldwide. With an overarching aim to enable this community to interact with digital media and the physical world more effectively, his projects span a wide array of innovative solutions powered by artificial intelligence (AI).

One of Guo’s recent projects, EditScribe, aims to make photo editing accessible to people who are blind or have low vision. “We are developing tools where the user can use natural language as input and get outputs from the system,” he explained. For instance, a blind user could ask EditScribe to “remove the person” or “change the color of the cat” in a photo, and the system would edit it accordingly, all while providing feedback using detailed image descriptions.

This is achieved by combining the latest vision language models (VLMs) and large language models (LLMs). Specifically, EditScribe makes object-level image editing actions accessible through natural language verification loops powered by large multimodal models. The user comprehends the image content through initial general and object descriptions, specifies edit actions using open-ended prompts, and receives four types of verification feedback: a summary of visual changes, an AI judgment, and updated general and object descriptions.
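To make the describe, edit, and verify cycle concrete, here is a minimal Python sketch. It is not EditScribe's implementation: the `Scene` class, the `remove` and `recolor` actions, and every function name are hypothetical, and a toy dictionary of object colors stands in for the image and for the multimodal models a real system would call.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Scene:
    """Toy stand-in for an image: maps an object name to its color."""
    objects: dict[str, str] = field(default_factory=dict)


def describe(scene: Scene) -> str:
    """General description (a real system would query a vision language model)."""
    if not scene.objects:
        return "An empty scene."
    parts = [f"a {color} {name}" for name, color in sorted(scene.objects.items())]
    return "A scene with " + ", ".join(parts) + "."


def apply_edit(scene: Scene, action: str, target: str, value: str = "") -> Scene:
    """Apply one object-level edit and return a new scene (hypothetical actions)."""
    objects = dict(scene.objects)
    if action == "remove":
        objects.pop(target, None)
    elif action == "recolor" and target in objects:
        objects[target] = value
    return Scene(objects)


def verify(before: Scene, after: Scene, action: str, target: str, value: str = "") -> dict[str, object]:
    """Compare the scene before and after the edit and build the feedback."""
    removed = sorted(set(before.objects) - set(after.objects))
    recolored = sorted(n for n in after.objects if before.objects.get(n) != after.objects[n])
    changes = [f"removed {n}" for n in removed]
    changes += [f"{n} is now {after.objects[n]}" for n in recolored]
    if action == "remove":
        succeeded = target in before.objects and target not in after.objects
    else:
        succeeded = after.objects.get(target) == value
    return {
        "summary": "; ".join(changes) or "no visible change",
        "judgment": "edit succeeded" if succeeded else "edit may have failed",
        "general_description": describe(after),
        "object_descriptions": {n: f"{c} {n}" for n, c in after.objects.items()},
    }


photo = Scene({"cat": "gray", "chair": "red"})
edited = apply_edit(photo, "recolor", "cat", "black")
for kind, text in verify(photo, edited, "recolor", "cat", "black").items():
    print(f"{kind}: {text}")
```

The point of the sketch is the shape of the loop: the user never needs to see the result, because each edit is followed by feedback that can be read aloud and checked against the request.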

By opening up the world of photo editing, EditScribe allows users to tap into their creativity and edit visual media independently, transforming their experience from passive consumers of content to active creators. This work was recently presented at the ASSETS 2024 conference.

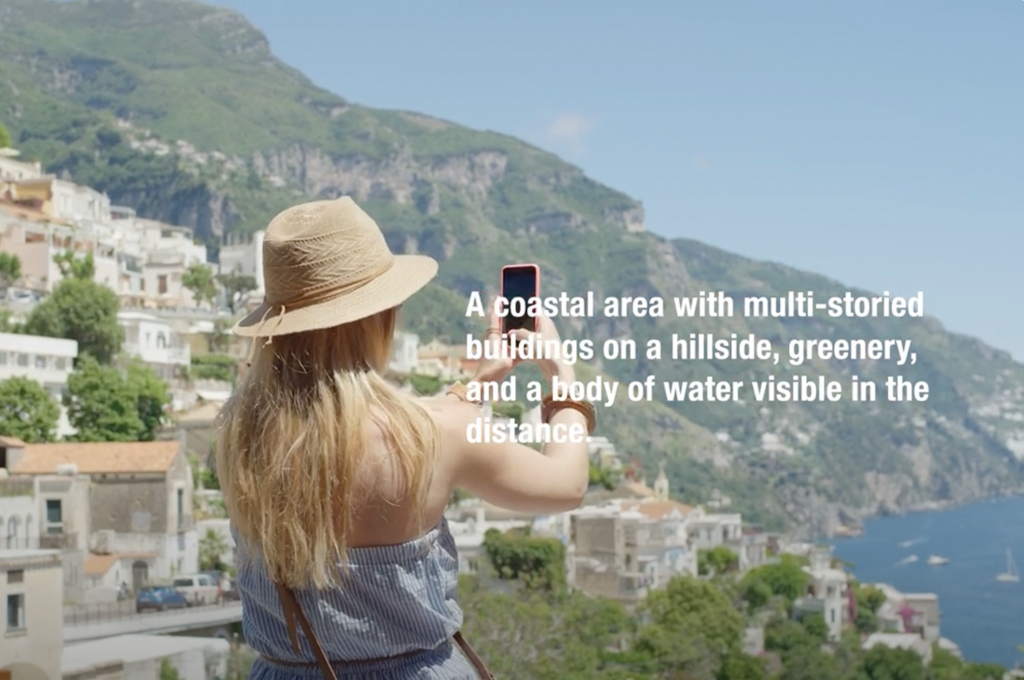

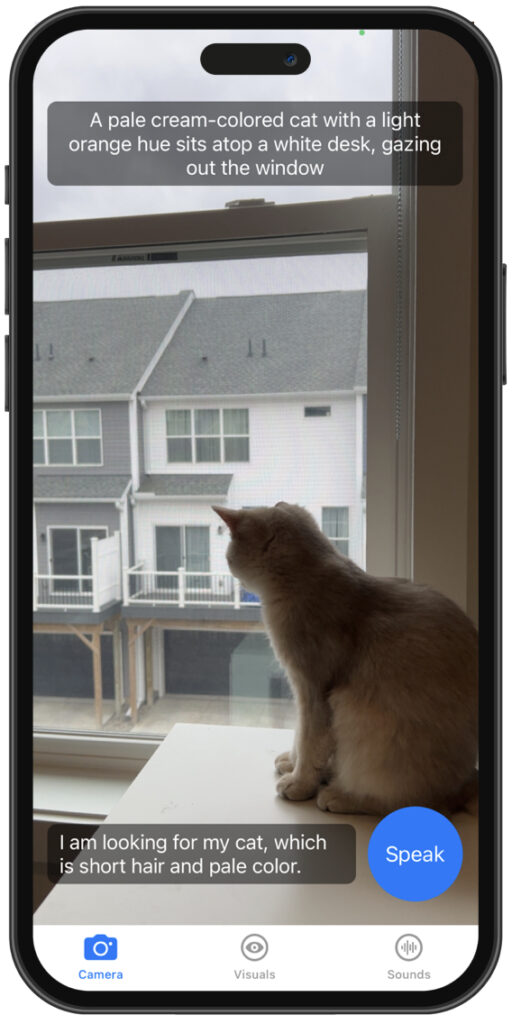

Expanding on these ideas, Guo has developed a program called WorldScribe, extending similar principles to 3D scenes in real time rather than to still images. WorldScribe, which generates live visual descriptions to assist people who are blind or have low vision, uses generative AI language models to interpret camera images and produce text and audio descriptions in real time. This helps users become aware of their surroundings more quickly and better navigate a variety of everyday environments, from busy city streets to quiet indoor spaces. For example, a user could ask for assistance in locating their cat, explore the room with their phone, and receive a response such as, “A pale cream-colored cat with a light orange hue sits atop a white desk, gazing out the window.”

WorldScribe won a Best Paper Award at UIST 2024, where it was recognized for significantly advancing assistive technologies. Furthermore, Guo’s work on WorldScribe recently received a $75,000 Google Academic Research Award, aimed at further honing the program’s ability to leverage real-world contextual knowledge to describe environments to users in real time.

Another key project led by Guo is ProgramAlly, which empowers blind users to tailor solutions to their specific needs through end-user programming. Whether it’s finding the expiration date on grocery items or sorting mail, users can create their own programs to interact with text and other visual information more effectively. This work was recently published and presented at UIST 2024.

In all of his projects, Guo works to closely engage and collaborate with community members who are blind or have low vision. “We involve people with disabilities at all stages of our research,” he said. “Their feedback is crucial, often leading to significant changes in our approach and solutions.”

Through these innovations, Guo hopes to empower people who are blind or have low vision to navigate everyday environments and technologies more easily, not only to foster a more inclusive, accessible world but also because doing so improves the user experience for everyone.

“Accessible technology is better technology,” he said. “It makes sense that developments made with all users in mind improve design across the board.”

Enhancing environmental awareness for the DHH community

Dhruv Jain applies a similar philosophy in his own research on accessibility, which aims to enhance environmental awareness for the DHH community. One of his prominent projects is SoundWatch, a smartwatch app that helps deaf individuals perceive critical sounds in their environment, like doorbells or microwave beeps. “We want to make sounds more perceivable and understandable,” said Jain.

Building on the success of SoundWatch, Jain’s team is now working on integrating contextual awareness into these systems. “The same sound might mean different things in different contexts,” he explained. In this work, he seeks to gain a holistic understanding of how contextual information can significantly change sound interpretation, with the aim of designing better assistive tools for DHH individuals.

A recent example of this is SoundModVR, a toolkit Jain and his team developed that enables sound modifications in virtual reality (VR) to support DHH users. Recently demoed at UIST 2024 and presented at ASSETS 2024, SoundModVR includes 18 AI-enabled VR sound modification tools that let users prioritize sounds, modify sound parameters, receive spatial assistance, and add supplementary sounds.
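As a rough illustration of what prioritizing a sound can mean in practice, the Python sketch below keeps one source at its current volume and turns every other source down. It is an assumption-laden toy, not SoundModVR's code: the `prioritize` function, the `duck` factor, and the source names are all hypothetical.

```python
from __future__ import annotations


def prioritize(volumes: dict[str, float], focus: str, duck: float = 0.25) -> dict[str, float]:
    """Keep the focused source at its volume and scale every other source by `duck`."""
    if focus not in volumes:
        raise KeyError(f"unknown sound source: {focus}")
    if not 0.0 <= duck <= 1.0:
        raise ValueError("duck must be between 0 and 1")
    return {name: vol if name == focus else vol * duck for name, vol in volumes.items()}


# Hypothetical VR scene: the user wants a teammate's voice to stand out.
mix = prioritize({"teammate": 0.8, "music": 0.6, "footsteps": 0.4}, focus="teammate", duck=0.5)
print(mix)
```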

SoundModVR is designed to be versatile and user-friendly, allowing for real-time adjustments to the VR auditory environment. Jain’s evaluation of the program with DHH users revealed that the toolkit significantly enhances the VR experience, and his team is currently working to equip the tool with more customization options to further reduce cognitive load.

In addition to SoundModVR, Jain’s recent research endeavors have been bolstered by a $75,000 grant from the Google Academic Research Awards program. This grant will support his development of a comprehensive AI system that provides nuanced auditory scene information to DHH users. The project aims to use state-of-the-art machine learning models to provide detailed auditory cues, such as sources of multiple sounds, their distances, importance compared to other sounds, or the temporal sequence of sound events, thus significantly improving users’ overall awareness and safety.

“We hope to convey more holistic semantic auditory information about the surroundings, which can provide DHH individuals with actionable cues and support them in everyday tasks,” Jain explained. “For example, it’s not enough to know that a washer is running. It’s also important for DHH people to know whether it has just started spinning or if it’s ending its cycle and ready for the clothes to be taken out. Conveying the state of the appliance is crucial.”
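Jain's washer example amounts to tracking state across a sequence of sound events rather than reporting each sound in isolation. The Python sketch below shows that idea in its simplest form. It is not the project's system: the event labels, state names, and messages are hypothetical, and a real system would obtain the events from a sound recognition model.

```python
from __future__ import annotations

# Hypothetical sound-event labels and the washer state each one signals.
TRANSITIONS = {
    "water_filling": "filling",
    "drum_agitating": "washing",
    "fast_spin": "spinning",
    "end_chime": "done",
}

# Hypothetical user-facing message for each state.
MESSAGES = {
    "idle": "The washer is not running.",
    "filling": "The washer has just started and is filling with water.",
    "washing": "The washer is mid-cycle.",
    "spinning": "The washer is spinning and will finish soon.",
    "done": "The washer has finished; the clothes are ready to take out.",
}


def washer_state(events: list[str], state: str = "idle") -> str:
    """Walk through the recognized sound events in order and return the latest state."""
    for event in events:
        # Sounds unrelated to the washer (a dog bark, a doorbell) leave the state unchanged.
        state = TRANSITIONS.get(event, state)
    return state


def announce(events: list[str]) -> str:
    """Turn a sequence of sound events into one actionable message."""
    return MESSAGES[washer_state(events)]


print(announce(["water_filling", "drum_agitating", "dog_bark", "fast_spin"]))
```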

Jain’s other ongoing initiatives include collaborating with the medical community to improve accessible communication in healthcare settings, as well as exploring how wearable technology like headphones can be made more accessible through contextual audio delivery.

“My goal is to bridge the gap between technology and the real-time needs of the DHH community,” he said. “By making sounds more perceivable and understandable, we can create tools that enhance the experience of many.”

Outside of his research, Jain is a strong proponent of accessibility at U-M and in the field of computer science more broadly. He recently completed a term as the first-ever VP for Accessibility with the ACM Special Interest Group on Computer-Human Interaction (SIGCHI), where he oversaw the group’s efforts to make its conferences and publications more accessible to people with a variety of sensory, motor, and language barriers and other disabilities.

“Making technology accessible creates a more equitable and enriched digital world for everyone,” said Jain. “We are working toward a future where technology enhances the lived experiences of all individuals, regardless of their abilities.”

The Michigan difference

The work being done in CSE represents the cutting edge of accessible technology research. Innovative solutions like WorldScribe and SoundModVR not only address critical gaps in current assistive technologies but also set new standards for what is possible in this realm. Guo and Jain’s efforts are contributing significantly to a broader understanding of and appreciation for accessible technology design, opening up new avenues of improvement so that tech can better meet the diverse needs of all users.

Moreover, by involving individuals with disabilities from the outset and throughout the development process, these researchers are ensuring that their innovations are truly beneficial. “When we center the voices and experiences of people with disabilities in our research, we create designs that are more intuitive, effective, and inclusive,” said Jain.

The University of Michigan’s commitment to accessibility goes beyond the Human-Centered Computing Lab, with faculty across the university pushing the boundaries of what accessible technology can achieve. This includes Steve Oney, a professor in the School of Information and CSE, whose research has contributed to improving web accessibility and programming tools for blind and low-vision individuals. Another faculty member in the School of Information, Robin Brewer, works to build digital systems, from voice assistants to visual access tools, to support disabled people, older adults, and caregivers.

They are joined by faculty member Sile O’Modhrain, a professor in both the School of Music, Theatre & Dance and the School of Information and a blind person herself, whose research focuses on interfaces incorporating haptic and auditory feedback, and their application in both enabling musical expression and improving accessibility. Venkatesh Potluri, a recently hired faculty member in the School of Information and member of the blind community, works to examine and address accessibility barriers for developers who are blind or low-vision, particularly in the areas of interface design, physical computing, and data science.

With a robust network of campus centers such as the Center for Disability Health and Wellness (CDHW), MDisability, and the Kresge Hearing Research Institute, U-M provides a rich, collaborative environment for accessibility research. These centers conduct in-depth studies of disabilities and supply valuable data and insights essential for developing assistive technologies and other initiatives. As a result, U-M is considered one of the top institutions globally for accessibility research, particularly in the area of accessible computing.

The work of CSE researchers, together with their colleagues at the School of Information and across the university, is harnessing the power of AI to usher in a new era of accessible tech, while also positioning U-M as a global leader in this space.

Toward an inclusive future for everyone

There is more than just an ethical and humanitarian reason to make tech accessible – there is also a business case to be made. Inclusivity drives innovation and opens up new markets, making it a win-win for everyone involved.

“Designing for people with disabilities not only benefits them but also drives better technology for everyone,” said Jain. He pointed out that features like subtitles and noise-canceling headphones, which were initially designed with specific accessibility needs in mind, are now widely used by the general population. “People with disabilities are often early adopters of many modern technologies such as emails, earphones, voice captioning, audiobooks, and a variety of input and output devices,” he said. “Working in accessibility provides us a glimpse into the future.”

Many tech companies are beginning to understand the importance and the multi-faceted benefits of accessibility. Microsoft, for example, has invested heavily in accessibility features across its products, such as the customizable Xbox Adaptive Controller and the Windows Narrator screen reader. Similarly, Apple has made efforts to update its products with accessible features like AssistiveTouch, a touch-based interface for those with motor impairments, and Sound Actions, which allow individuals with severe disabilities to operate devices using custom voice commands – a feature to which Jain contributed.

Such advances are made possible by the pioneering efforts of researchers at the University of Michigan, who are bringing us closer than ever to achieving a more inclusive and user-friendly future. Their work is setting a new standard for accessibility in tech, demonstrating that when we design with all users in mind, everyone benefits.
