Ten year impact award for landmark early work on video sentiment analysis

Research by Janice M. Jenkins Professor of Computer Science Rada Mihalcea was recognized by the ACM International Conference on Multimodal Interaction with a Ten Year Technical Impact Award. Her 2011 paper “Towards Multimodal Sentiment Analysis: Harvesting Opinions on the Web” was co-authored with Carnegie Mellon Associate Professor LP Morency and former University of Southern California graduate student Payal Doshi.

“The internet is becoming an almost infinite source of information,” the authors wrote at the time of the paper’s publication. “One crucial challenge for the coming decade is to be able to harvest relevant information from this constant flow of multimodal data.”

The intervening ten years have more than justified their motivation. At the time, figures like 10,000 videos being uploaded daily to YouTube were still very new. Since 2011, the number of hours of video uploaded to YouTube every minute has increased more than tenfold, and the platform is slowly being challenged by TikTok and its one billion active users.

The paper sought to address the task of multimodal sentiment analysis, and the authors conducted proof-of-concept experiments that demonstrated that it was possible to devise a joint model that integrates visual, audio, and textual features from online videos.

Their paper made three key contributions:

- It was the first to address the task of sentiment analysis in three modes simultaneously, showing that it was a feasible task.

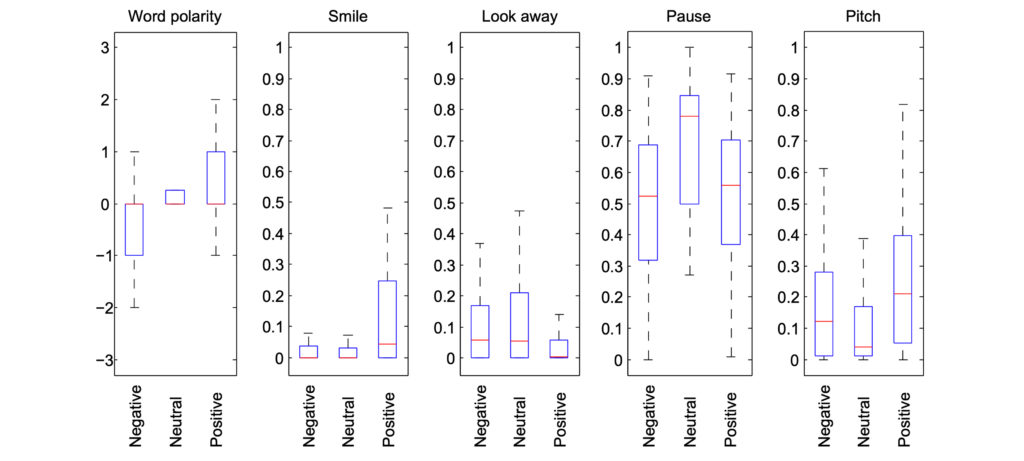

- It identified a subset of audio-visual features relevant to sentiment analysis, and presented guidelines on how to integrate those features.

- It introduced a new dataset of real online data for future research.

The researchers’ dataset was sourced from YouTube to address issues of diversity, modality of sentiment delivery, and ambient noise. It was one of the first datasets of its kind to reflect the real world of video, outside of a lab setting.

“While the previous research on audio-visual emotion analysis used videos recorded in laboratory settings, our dataset contains videos recorded by users in their home, office or outdoor, using different web cameras and microphones,” the authors wrote.

According to Google Scholar, the work has been cited over 300 times.
