Autonomous vehicles can be fooled to ‘see’ nonexistent obstacles

Vehicles that perceive obstacles that aren’t really there could cause traffic accidents.

By Z. Morley Mao and Yulong Cao

Nothing is more important to an autonomous vehicle than sensing what’s happening around it. Like human drivers, autonomous vehicles need the ability to make instantaneous decisions.

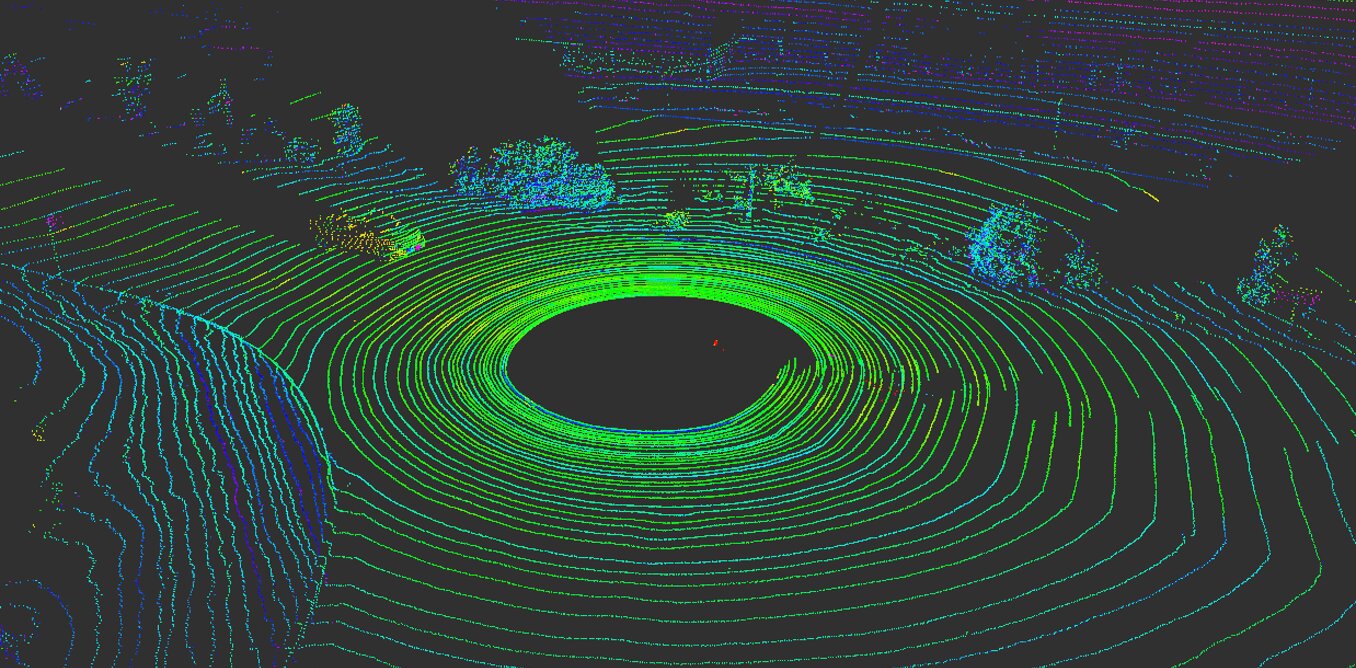

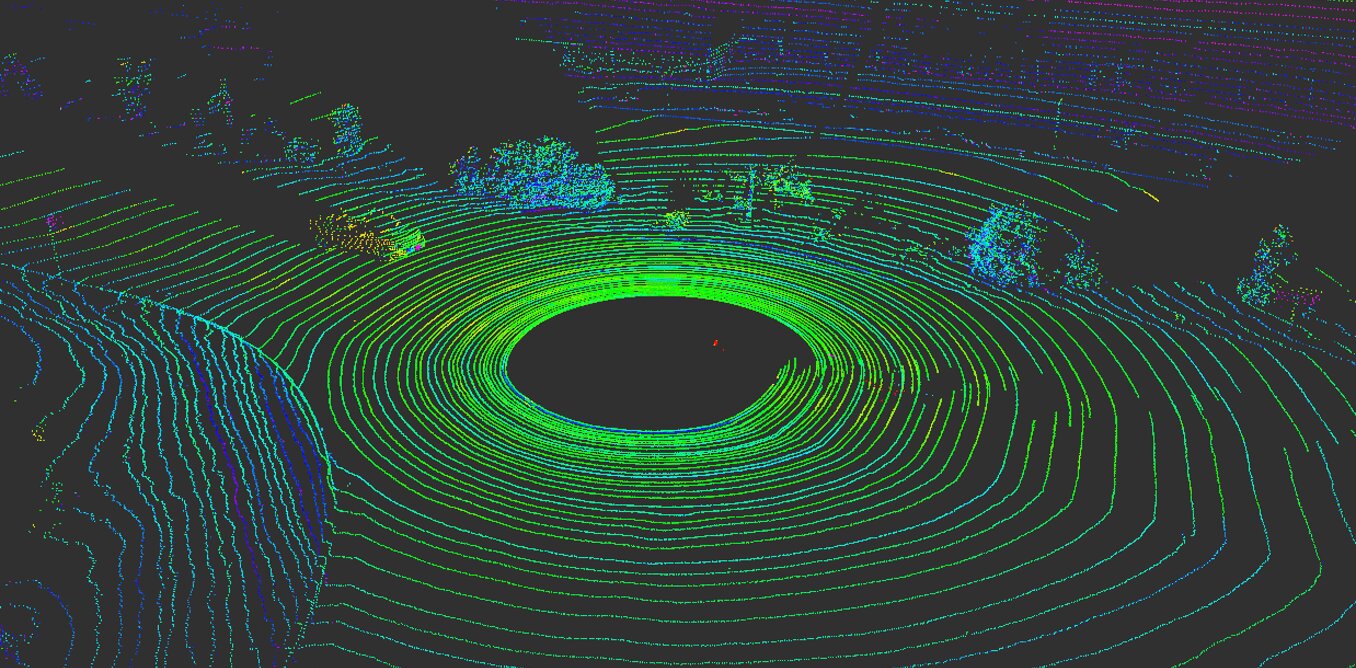

Today, most autonomous vehicles rely on multiple sensors to perceive the world. Most systems use a combination of cameras, radar sensors and LiDAR (light detection and ranging) sensors. Onboard computers fuse this data to create a comprehensive view of what’s happening around the car. Without this data, autonomous vehicles would have no hope of safely navigating the world. Cars that use multiple sensor systems both work better and are safer – each system can serve as a check on the others – but no system is immune to attack.

Unfortunately, these systems are not foolproof. Camera-based perception systems can be tricked simply by putting stickers on traffic signs to completely change their meaning.

Our work, from the RobustNet Research Group at the University of Michigan with computer scientist Qi Alfred Chen from UC Irvine and colleagues from the SPQR lab, has shown that the LiDAR-based perception system can be compromised, too.

This article is republished from The Conversation. Read the full article.
